Notes on Instrumental Variables

Here are my notes on instrumental variables from Stefan’s lecture materials.

Motivation

How can we identify causal effects?

One popular way is to find and use instrumental variables.

Partially Linear IV Models

When instrumental variables are available, it becomes possible to point identify causal effects in partially linear models and certain types of causal effects in nonlinear models.

Here we begin with partially linear models.

This is a semiparametric specification, in that we impose a linear relationship between

When the constant treatment effect model (PLM) doesn’t hold, the average treatment effect

Identifying assumptions

There are three main identifying assumptions that must be satisfied for a variable

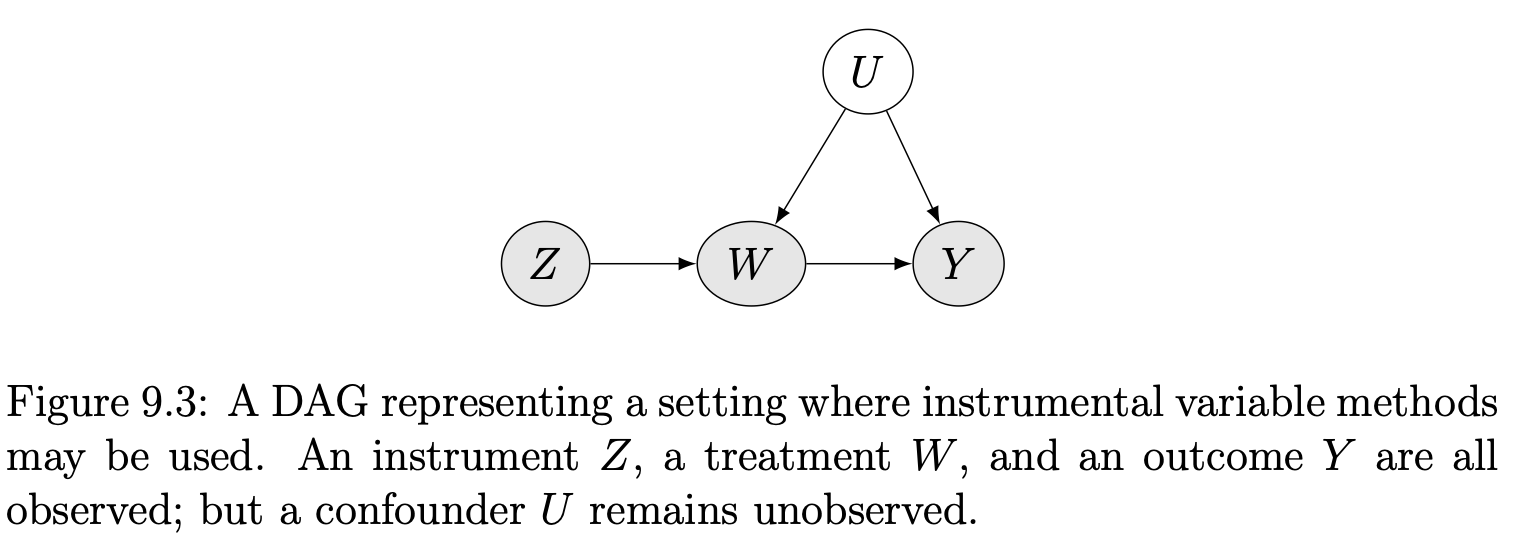

Graphically, the relevance assumption corresponds to the existence of an active edge from

Graphically, this means that we’ve excluded enough potential edges between variables in the causal graph so that all causal paths from

Case I (Easiest)

Consider the fully linear version,

Then,

It implies,
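A minimal sketch of the fully linear case on simulated data. It assumes the model is Y = tau * W + noise, with an instrument Z that is uncorrelated with the noise, in which case the standard ratio tau = Cov(Y, Z) / Cov(W, Z) applies. The variable names, coefficients, and the confounder `u` are illustrative assumptions, not taken from the lecture.

```python
import numpy as np

def iv_ratio_estimate(y, w, z):
    """Ratio-of-covariances IV estimate: Cov(Y, Z) / Cov(W, Z)."""
    cov_yz = np.cov(y, z)[0, 1]
    cov_wz = np.cov(w, z)[0, 1]
    return cov_yz / cov_wz

# Simulated data; every number below is an illustrative assumption.
rng = np.random.default_rng(0)
n = 100_000
tau = 2.0                                    # true constant treatment effect
u = rng.normal(size=n)                       # unobserved confounder
z = rng.normal(size=n)                       # instrument, independent of u
w = 0.8 * z + u + rng.normal(size=n)         # treatment depends on z and u
y = tau * w + 1.5 * u + rng.normal(size=n)   # z affects y only through w

tau_iv = iv_ratio_estimate(y, w, z)               # uses only variation in w driven by z
tau_ols = np.cov(y, w)[0, 1] / np.var(w, ddof=1)  # naive regression slope, confounded by u

print(f"IV estimate:  {tau_iv:.3f}")
print(f"OLS estimate: {tau_ols:.3f}")
```

In this simulation the naive regression slope is pushed away from `tau` by the confounder, while the IV ratio is not, because `z` is independent of `u` by construction.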

Case II (More general: optimal instruments)

Case I assumes (1) linear relationship between

- we have multiple instruments
- or we believe that the instrument may act non-linearly

Consider the following,

where

Then, by the same argument as in Case I (note: we can regard

provided the denominator is non-zero, resulting in a feasible estimator

What is the best function

How do we estimate? Do cross-fitting! Why? Because

We no longer have
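A rough sketch of a cross-fitted version of this estimator. It assumes the instrument is transformed by an estimate of E[W | Z] (the usual choice of optimal instrument under homoskedastic noise) and that the estimate is Cov(Y, w_hat(Z)) / Cov(W, w_hat(Z)). The polynomial fit, the fold count, and the helper names `cross_fit_instrument` and `iv_estimate` are hypothetical choices made for illustration; any flexible regression could take the place of the polynomial.

```python
import numpy as np

def cross_fit_instrument(w, z, n_folds=5, degree=3, seed=0):
    """Out-of-fold predictions of E[W | Z] from a polynomial fit in Z."""
    rng = np.random.default_rng(seed)
    fold = rng.permutation(len(w)) % n_folds   # random, balanced fold labels
    w_hat = np.empty(len(w))
    for k in range(n_folds):
        train, test = fold != k, fold == k
        coefs = np.polyfit(z[train], w[train], deg=degree)
        # Predict only on the held-out fold, so no unit's own treatment
        # value is used to build its fitted instrument.
        w_hat[test] = np.polyval(coefs, z[test])
    return w_hat

def iv_estimate(y, w, instrument):
    """Cov(Y, instrument) / Cov(W, instrument)."""
    return np.cov(y, instrument)[0, 1] / np.cov(w, instrument)[0, 1]

# Simulated data; all numbers are illustrative assumptions.
rng = np.random.default_rng(1)
n = 50_000
tau = 1.0
u = rng.normal(size=n)                       # unobserved confounder
z = rng.uniform(-2, 2, size=n)               # instrument
w = z**2 + u + rng.normal(size=n)            # z acts non-linearly, so Cov(W, Z) is about 0
y = tau * w + 2.0 * u + rng.normal(size=n)

w_hat = cross_fit_instrument(w, z)
tau_opt = iv_estimate(y, w, w_hat)
print(f"cross-fitted IV estimate: {tau_opt:.3f}")
```

In this design the raw instrument `z` is nearly uncorrelated with `w`, so the linear ratio from Case I would have a denominator close to zero; the fitted instrument recovers the non-linear first stage.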

Case III (Much more general: non-parametric IV regression)

A more general version of Case II is the following,

where

- (C3) still requires the effect of
- however, unlike (C2), it now allows this additive effect to be modified by a non-linearity

Now,

There are two steps for learning

- non-parametric model
- estimate

For more details, see Section 9.2 in Stefan’s lecture.

Local Average Treatment Effects

Motivation

- IV without the linearity assumption: One may doubt the validity of the linearity and constant treatment effect assumptions in the previous section. What about non-parametric identification using IV?
- Encouragement design and noncompliance: Noncompliance is a common problem in encouragement designs with human experimental units. In those cases, the experimenters cannot force the units to take the treatment and can only encourage them to do so. Heterogeneous effects should be allowed.

Setup

Consider a randomized experiment,

- Let
- Let
- When
- Potential outcome
- Potential outcome

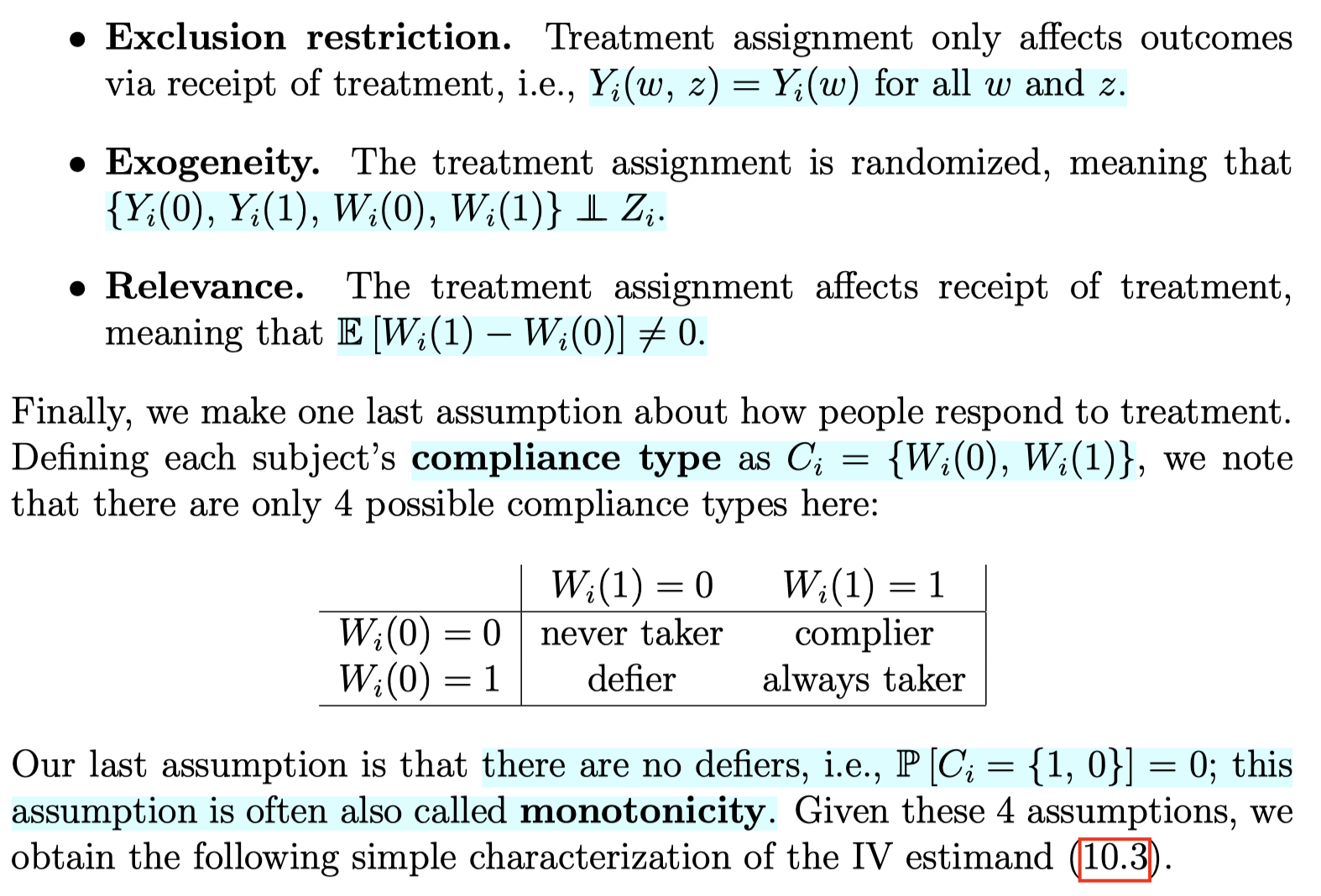

Identifying assumptions

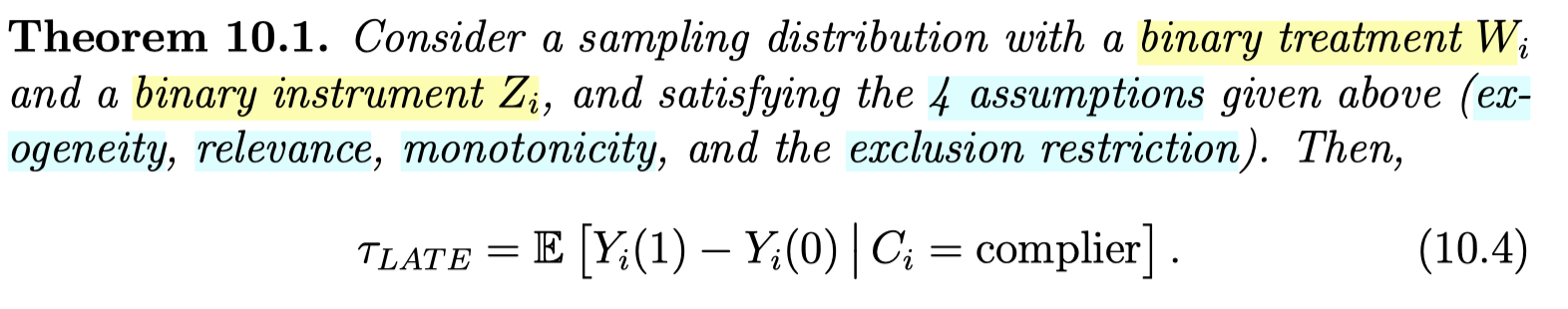

LATE Theorem

Idea of proof:

- Start with
- Derive
- Decompose the ATE on
- By the exclusion restriction and the monotonicity assumption, only the “compliers” remain
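The proof idea above can be checked numerically with a small simulation. This is a sketch under stated assumptions: a binary randomized encouragement `z`, a binary treatment `w`, three compliance types with no defiers (monotonicity), and outcomes that depend on `z` only through `w` (exclusion restriction). The type shares and effect sizes are made up for illustration, and the ratio computed is the standard Wald estimator.

```python
import numpy as np

def wald_estimate(y, w, z):
    """(E[Y|Z=1] - E[Y|Z=0]) / (E[W|Z=1] - E[W|Z=0])."""
    itt_y = y[z == 1].mean() - y[z == 0].mean()   # effect of encouragement on outcome
    itt_w = w[z == 1].mean() - w[z == 0].mean()   # effect of encouragement on uptake
    return itt_y / itt_w

rng = np.random.default_rng(0)
n = 200_000

# Compliance types; shares are illustrative. No defiers, i.e. monotonicity holds.
types = rng.choice(["complier", "always", "never"], size=n, p=[0.5, 0.2, 0.3])
z = rng.integers(0, 2, size=n)                    # randomized encouragement

# Treatment actually taken, by type.
w = np.where(types == "always", 1, np.where(types == "never", 0, z))

# Heterogeneous effects: compliers 2.0, always-takers 5.0, never-takers 0.5.
effect = np.where(types == "complier", 2.0,
                  np.where(types == "always", 5.0, 0.5))
y0 = rng.normal(size=n) + (types == "always") * 1.0   # baseline outcome
y = y0 + effect * w                                   # z enters only through w

late_hat = wald_estimate(y, w, z)
complier_share_hat = w[z == 1].mean() - w[z == 0].mean()
print(f"Wald estimate: {late_hat:.3f}, estimated complier share: {complier_share_hat:.3f}")
```

The always-takers and never-takers do not change their treatment with `z`, so they cancel out of both differences; the ratio should be close to the complier effect of 2.0 rather than to the population average effect.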

Multiple instruments

We may have access to data from multiple randomized trials that can be used to study a treatment effect via a non-compliance analysis.

Marketing example:

- goal: study the effect of subscription to a loyalty program (
- randomized trial 1: offering discounts for joining the loyalty program
- randomized trial 2: showing advertisements

Previously, under the linear treatment effect model, multiple instruments could be combined into a single optimal instrument, and the optimal instrument corresponds to the summary of all the instruments that best predicts the treatment.

Without the linear treatment effect model, however, no such result is available. Different instruments may induce different compliance patterns, so the LATEs identified by different instruments may not be the same.

In the marketing example, the ATE for customers who respond to a discount may be different from the ATE for customers who respond to an advertisement.
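A toy simulation of the marketing example, as a sketch only. It assumes two hypothetical customer segments, one that joins the program only when offered a discount and one that joins only when shown an advertisement, with different effects of joining on spending. The segment names, shares, and effect sizes are invented for illustration.

```python
import numpy as np

def wald_estimate(y, w, z):
    """(E[Y|Z=1] - E[Y|Z=0]) / (E[W|Z=1] - E[W|Z=0])."""
    itt_y = y[z == 1].mean() - y[z == 0].mean()
    itt_w = w[z == 1].mean() - w[z == 0].mean()
    return itt_y / itt_w

rng = np.random.default_rng(0)
n = 200_000

# Hypothetical segments: who joins the loyalty program under which encouragement.
segment = rng.choice(["discount_responder", "ad_responder", "never_joins"],
                     size=n, p=[0.3, 0.3, 0.4])
# Effect of joining on spending, by segment (illustrative values).
effect = np.where(segment == "discount_responder", 1.0,
                  np.where(segment == "ad_responder", 4.0, 0.0))

def run_trial(responder, seed):
    """Randomize one encouragement; only the `responder` segment complies."""
    trial_rng = np.random.default_rng(seed)
    z = trial_rng.integers(0, 2, size=n)                  # randomized encouragement
    w = ((segment == responder) & (z == 1)).astype(int)   # joins the program
    y = trial_rng.normal(size=n) + effect * w             # spending
    return wald_estimate(y, w, z)

late_discount = run_trial("discount_responder", seed=1)
late_ads = run_trial("ad_responder", seed=2)
print(f"LATE from discount trial: {late_discount:.3f}")
print(f"LATE from ad trial:       {late_ads:.3f}")
```

Both trials are valid instruments for the same treatment, yet each Wald estimate recovers the effect for its own compliers, so the two numbers differ.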

Reference

Wager, S. (2024). Causal inference: A statistical learning approach. https://web.stanford.edu/~swager/causal_inf_book.pdf