From Donsker Classes to Neyman Orthogonality: The Power of DML

Motivation & Intuition

In classical semiparametric theory, we want to estimate a low-dimensional target parameter (say, a treatment effect) while controlling for high-dimensional or infinite-dimensional nuisance functions (like nonparametric regressions). To apply a central limit theorem (CLT) and characterize the asymptotic behavior of the estimator, classical results require that the nuisance estimators take values in a function class that is “small” in a technical sense. In particular, the class must be Donsker: roughly speaking, its complexity (measured via “entropy”) must be low enough that the empirical process indexed by the class converges to a Gaussian process. This condition is too restrictive in modern applications, where the nuisance functions are estimated by flexible machine learning methods (e.g., random forests, boosting, deep neural nets) whose function classes are very large and generally not Donsker.

The double machine learning (DML) approach overcomes this problem by using Neyman orthogonal scores. The key idea is that the moment functions used to estimate the target parameter are constructed so that they are insensitive, to first order, to errors in the nuisance functions: small nuisance errors have only a second-order effect on the final estimator. In plain language, even if your machine learning methods are “messy” or come from huge function classes (i.e., they do not satisfy the Donsker conditions), the estimate of your target parameter remains well behaved as long as the nuisance estimators converge fast enough, typically faster than n^(-1/4), so that products of nuisance errors are negligible at the root-n scale. Orthogonality alone does not remove overfitting bias, so the approach also uses sample splitting (or cross-fitting): the nuisance functions are fit on one part of the data and the score is evaluated on the held-out part, with the roles then swapped so that every observation is used.
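To make the two ingredients concrete, here is a minimal sketch of cross-fitted DML for the partially linear model Y = theta*D + g(X) + noise. Everything in it is an illustrative assumption rather than something taken from the text above: the simulated data, the function names (`knn_fit_predict`, `dml_plr`, `simulate`), and the use of a simple 1-D nearest-neighbour average as a stand-in for whatever machine learning regressor you would actually use.

```python
import math
import random


def knn_fit_predict(x_train, t_train, x_test, k=15):
    """Hypothetical stand-in for any ML regressor: k-nearest-neighbour mean in 1-D."""
    preds = []
    for x0 in x_test:
        nearest = sorted(range(len(x_train)), key=lambda i: abs(x_train[i] - x0))[:k]
        preds.append(sum(t_train[i] for i in nearest) / k)
    return preds


def dml_plr(y, d, x, n_folds=2, seed=0):
    """Cross-fitted estimate of theta in Y = theta*D + g(X) + noise, with a plug-in SE."""
    n = len(y)
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[j::n_folds] for j in range(n_folds)]
    res_y = [0.0] * n  # Y minus the estimate of E[Y | X]
    res_d = [0.0] * n  # D minus the estimate of E[D | X]
    for held_out in folds:
        held = set(held_out)
        train = [i for i in idx if i not in held]
        x_tr = [x[i] for i in train]
        x_te = [x[i] for i in held_out]
        # Nuisances are fit on the training folds only, then evaluated out of fold.
        l_hat = knn_fit_predict(x_tr, [y[i] for i in train], x_te)
        m_hat = knn_fit_predict(x_tr, [d[i] for i in train], x_te)
        for i, lh, mh in zip(held_out, l_hat, m_hat):
            res_y[i] = y[i] - lh
            res_d[i] = d[i] - mh
    # Solve the orthogonal moment condition: sum((res_y - theta * res_d) * res_d) = 0.
    den = sum(rd * rd for rd in res_d)
    theta = sum(rd * ry for rd, ry in zip(res_d, res_y)) / den
    # Plug-in variance built from the same score.
    psi = [(ry - theta * rd) * rd for rd, ry in zip(res_d, res_y)]
    jac = den / n
    var = (sum(p * p for p in psi) / n) / (jac * jac)
    return theta, math.sqrt(var / n)


def simulate(n=500, theta=1.0, seed=1):
    """Toy data with nonlinear confounding through X (an assumed design)."""
    rng = random.Random(seed)
    x = [rng.uniform(-2, 2) for _ in range(n)]
    d = [math.sin(xi) + rng.gauss(0, 1) for xi in x]
    y = [theta * di + math.cos(xi) ** 2 + rng.gauss(0, 1) for di, xi in zip(d, x)]
    return y, d, x


y, d, x = simulate()
theta_hat, se = dml_plr(y, d, x)
print(f"theta_hat = {theta_hat:.3f} (se {se:.3f})")
```

The design choice to notice is that each observation's residuals come from nuisance fits that never saw that observation. Swapping `knn_fit_predict` for a random forest or a neural net would not change the rest of the procedure, which is the practical payoff of not needing a Donsker condition.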

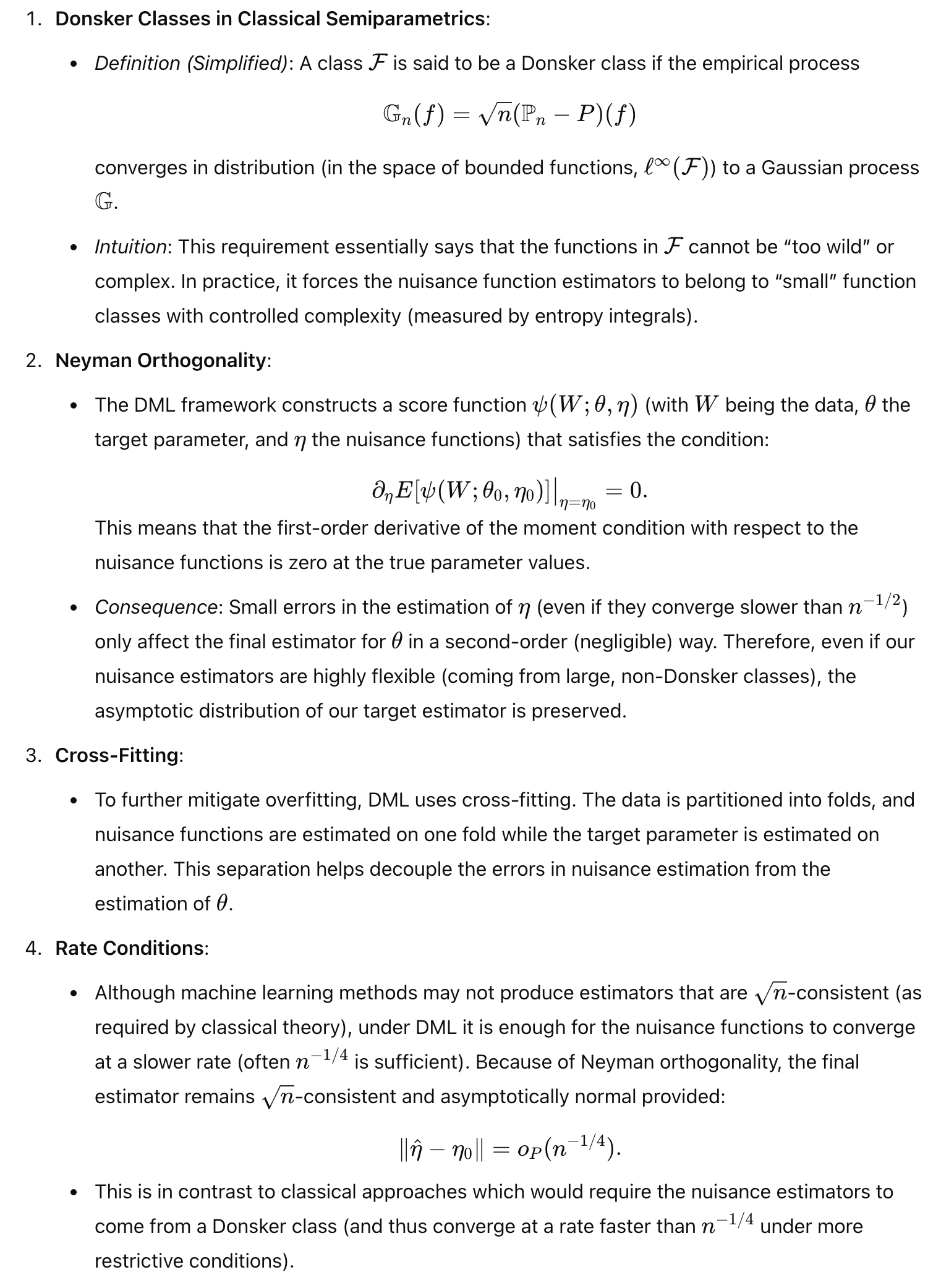

Key Math Details
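
As a sketch of the formal statement behind the intuition above, consider the standard partially linear model. The notation here (W, θ, η, ψ, ℓ, m, U, V) is assumed for illustration and is not taken from the original text. Write W = (Y, D, X) for the data, θ₀ for the target parameter, and η₀ = (ℓ₀, m₀) for the true nuisance functions, with ℓ₀(X) = E[Y | X] and m₀(X) = E[D | X].

$$
Y = D\theta_0 + g_0(X) + U, \quad \mathbb{E}[U \mid X, D] = 0, \qquad
D = m_0(X) + V, \quad \mathbb{E}[V \mid X] = 0 .
$$

A score ψ identifies θ₀ through a moment condition, and it is Neyman orthogonal if that moment has zero derivative with respect to the nuisance, in every direction, at the truth:

$$
\mathbb{E}\big[\psi(W; \theta_0, \eta_0)\big] = 0, \qquad
\frac{\partial}{\partial r}\, \mathbb{E}\big[\psi\big(W; \theta_0, \eta_0 + r(\eta - \eta_0)\big)\big]\Big|_{r=0} = 0 .
$$

For the partially linear model, one orthogonal score and the estimator it implies are

$$
\psi(W; \theta, \eta) = \big(Y - \ell(X) - \theta\,(D - m(X))\big)\big(D - m(X)\big), \qquad
\hat\theta = \frac{\sum_i \big(D_i - \hat m(X_i)\big)\big(Y_i - \hat\ell(X_i)\big)}{\sum_i \big(D_i - \hat m(X_i)\big)^2},
$$

where the fitted nuisances are evaluated out of fold. Orthogonality holds here because, at η₀, the first factor equals U and the second equals V, and perturbing ℓ or m only produces terms that are multiplied by U or V and depend otherwise on X alone; these vanish in expectation. A sufficient rate condition, together with cross-fitting and regularity conditions, then gives asymptotic normality:

$$
\|\hat m - m_0\|_{L^2} = o_P(n^{-1/4}), \quad \|\hat\ell - \ell_0\|_{L^2} = o_P(n^{-1/4})
\;\;\Longrightarrow\;\;
\sqrt{n}\,(\hat\theta - \theta_0) \xrightarrow{d} N(0, \sigma^2), \quad
\sigma^2 = \frac{\mathbb{E}[U^2 V^2]}{\big(\mathbb{E}[V^2]\big)^2} .
$$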

Summary

Flexibility in Nuisance Estimation: DML frees us from the need for nuisance estimators to lie in “small” Donsker classes. Thanks to Neyman orthogonality and cross-fitting, we can plug in flexible, machine-learning-based nuisance estimates, even ones drawn from very rich function spaces, without contaminating the asymptotic distribution of the target parameter estimator, provided those estimates converge fast enough.

Thus, the contribution of DML is in allowing the use of complex, modern ML methods to estimate nuisance functions while still obtaining valid inference for the target parameter, bypassing the traditional, more restrictive Donsker conditions.