AIPW vs. Residual-on-Residual regression: Non-Parametric Flexibility or Efficiency?

Introduction

Two powerful tools in causal inference are the Augmented Inverse Propensity Weighting (AIPW) estimator and the Residual-on-Residual regression estimator for partially linear models. Drawing from Wager’s notes (2024), this post breaks down how these estimators work, compares their strengths and weaknesses, and offers tips for when to use each.

Residual-on-Residual regression

The semiparamteric partially linear model (PLM) assumes the outcome

Here,

-

Partialing out covariates: “Partialing out”

-

Estimate the effect: Use linear regression

Augmented Inverse Propensity Weighting (AIPW) Estimator

AIPW takes a fully non-parametric approach, aiming to estimate ATE,

Here,

The Key Difference: Non-Parametric vs. Partially Linear

AIPW is fully non-parametric, imposing no specific parametric form on the treatment effect, while residual-on-residual regression estimator assumes a partially linear structure

-

AIPW’s flexibility:

-

AIPW does not assume a specific parametric form!

-

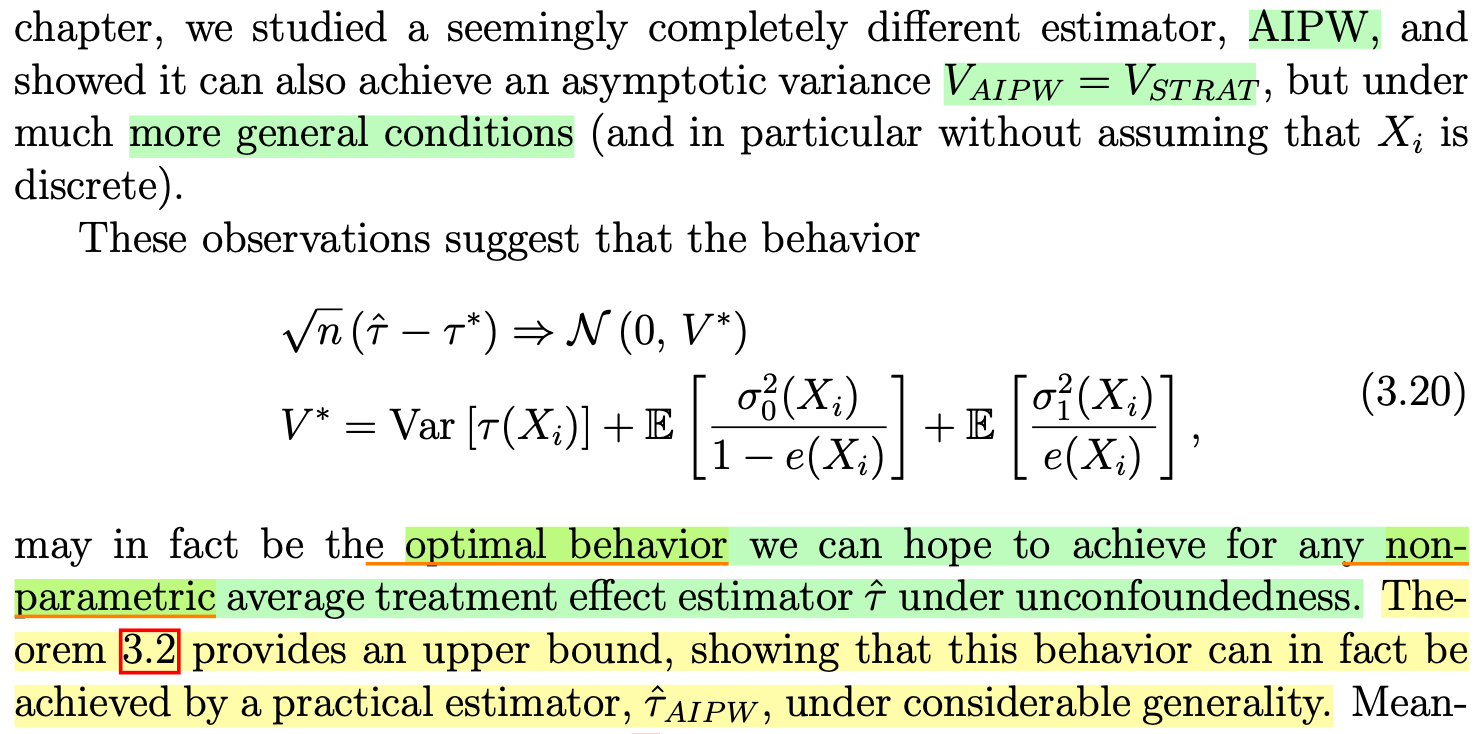

AIPW is efficient in the generic non-parametric setting.

-

-

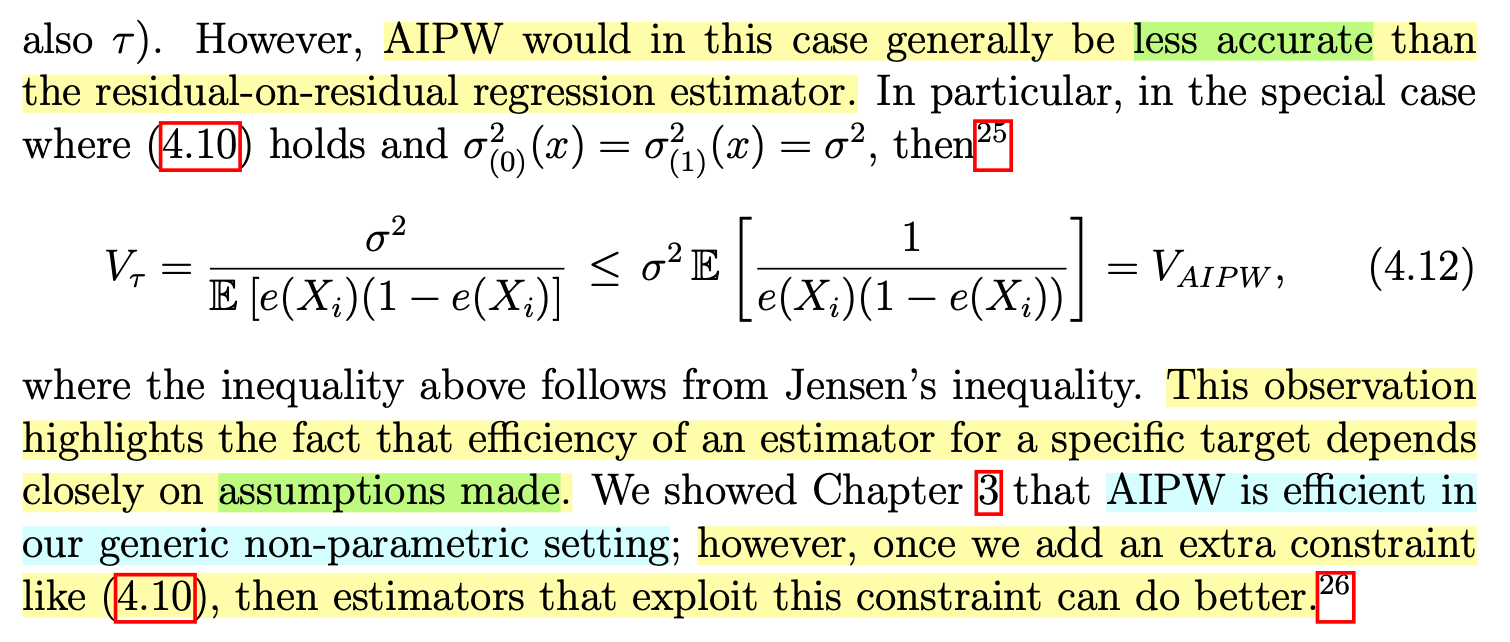

Residual-on-residual regression’s structure: What partially linear assumption buys us is that residual-on-residual estimators that exploit this constraint can have smaller variance than AIPW. In other words, adding this additional structure makes the residual-on-residual estimator more efficient than AIPW.

A risk of using the residual-on-residual estimator is that constant treatment effect model (1) may be misspecified.

A risk of using the residual-on-residual estimator is that constant treatment effect model (1) may be misspecified.

Why This Matters

The choice between AIPW and residual-on-residual regression reflects a deeper trade-off in causal inference: flexibility versus efficiency. AIPW’s non-parametric nature makes it a Swiss Army knife for complex data, while PLM structure is like a precision tool—effective when conditions are right.

As Wager’s notes highlight:

-

Both AIPW and residual-on-residual regression are Neyman-orthogonal, making them robust to first‐stage errors.

-

However, their assumptions shape their performance. AIPW attains the lowest possible asymptotic variance for ATE under unconfoundedness. The residual-on-residual estimator, by imposing extra structure, can go beyond that bound when its structure is correct but at the cost of vulnerability to misspecification.

Conclusion

AIPW’s fully non-parametric approach offers robustness and flexibility, while residual-on-residual regression’s partially linear structure prioritizes efficiency when assumptions hold.

Reference

Wager, S. (2024). Causal inference: A statistical learning approach. https://web.stanford.edu/~swager/causal_inf_book.pdf