A walkthrough of how Causal Forest 🌲 works

Introduction

In this post, I will go over how Causal Forest works, based on the tutorial in the grf R package. Causal Forest offers a flexible, data-driven approach to estimating heterogeneous treatment effects, bridging machine learning and causal inference.

Common Setting

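If we are working on an observational study, we have the following data for each unit $i = 1, \dots, n$:

- Outcome variable: $Y_i$
- Binary treatment indicator: $W_i \in \{0, 1\}$
- A set of covariates: $X_i$

Let’s assume that the following conditions hold:

- (Assumption 1) The treatment $W_i$ is unconfounded given $X_i$, i.e. treatment assignment is as good as random once we condition on the covariates.
- (Assumption 2) The confounders $X_i$ have a linear effect on $Y_i$.
- (Assumption 3) The treatment effect $\tau$ is constant.

Then we could run a regression of the type

$$Y_i = \tau W_i + \beta X_i + \varepsilon_i$$

and interpret the estimate of $\tau$ as an estimate of the average treatment effect $\tau = E[Y_i(1) - Y_i(0)]$.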
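As a minimal sketch of this baseline, the snippet below simulates data in which all three assumptions hold and recovers the treatment effect with ordinary least squares. The data-generating process, the function names, and the true effect of 2.0 are illustrative assumptions, not something taken from the grf tutorial.

```python
import numpy as np

def simulate_linear(n=5000, tau=2.0, seed=0):
    """Simulate data satisfying Assumptions 1-3 (hypothetical data-generating process)."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n, 2))
    # Treatment probability depends on X only, so W is unconfounded given X.
    p = 1.0 / (1.0 + np.exp(-X[:, 0]))
    W = rng.binomial(1, p)
    # Linear confounding and a constant treatment effect tau.
    Y = tau * W + X @ np.array([1.0, -0.5]) + rng.normal(size=n)
    return X, W, Y

def ols_tau(X, W, Y):
    """Return the OLS coefficient on W from regressing Y on [1, W, X]."""
    design = np.column_stack([np.ones(len(Y)), W, X])
    coef, *_ = np.linalg.lstsq(design, Y, rcond=None)
    return coef[1]

X, W, Y = simulate_linear()
tau_hat = ols_tau(X, W, Y)
print(f"estimated tau: {tau_hat:.3f}")
```

The design matrix includes an intercept column for convenience. The estimate should land close to the simulated effect only because the simulation was built to satisfy the linearity and constant-effect assumptions.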

Relaxing Assumptions

- Assumption 1 is an “identifying” assumption we have to live with.
- Assumption 2 and Assumption 3 are modeling assumptions that we can question.

Relaxing Assumption 2: Partially Linear Model (PLR)

Assumption 2 is a strong parametric modeling assumption: it requires the confounders to have a linear effect on the outcome. We can relax it by relying on semi-parametric statistics.

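We can instead posit the partially linear model:

$$Y_i = \tau W_i + f(X_i) + \varepsilon_i, \qquad E[\varepsilon_i \mid X_i, W_i] = 0$$

How do we get around estimating $\tau$ when we do not know $f(X_i)$?

Define the propensity score as

$$e(x) = E[W_i \mid X_i = x]$$

and the conditional mean of $Y_i$ as

$$m(x) = E[Y_i \mid X_i = x] = f(x) + \tau e(x).$$

By Robinson (1988), we can rewrite the above equation in “centered” form:

$$Y_i - m(X_i) = \tau \left(W_i - e(X_i)\right) + \varepsilon_i$$

This formulation has great practical appeal, as it means $\tau$ can be estimated by a residual-on-residual regression: regress the centered outcome $Y_i - m(X_i)$ on the centered treatment $W_i - e(X_i)$.

Good properties 😀: Robinson (1988) shows that this approach yields root-n consistent estimates of $\tau$, even if the estimates of $m(x)$ and $e(x)$ converge at a slower rate.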
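To make the centered form concrete, here is a small sketch that regresses the centered outcome on the centered treatment using the true (oracle) propensity score and conditional mean. The simulated functions and the helper name `residual_on_residual` are assumptions chosen for illustration; in practice the two nuisance functions are unknown and must be estimated.

```python
import numpy as np

rng = np.random.default_rng(1)
n, tau = 5000, 1.5

X = rng.uniform(-2, 2, size=n)
e = 1.0 / (1.0 + np.exp(-X))     # true propensity score e(x)
f = np.sin(X) + X ** 2           # nonlinear confounding f(x)
W = rng.binomial(1, e)
Y = tau * W + f + rng.normal(size=n)

# Conditional mean of Y given X under the partially linear model.
m = f + tau * e

def residual_on_residual(Y, W, m_hat, e_hat):
    """Least-squares slope (no intercept) of Y - m_hat on W - e_hat."""
    y_res = Y - m_hat
    w_res = W - e_hat
    return np.sum(w_res * y_res) / np.sum(w_res ** 2)

tau_robinson = residual_on_residual(Y, W, m, e)
print(f"Robinson estimate of tau: {tau_robinson:.3f}")
```

No functional form for `f` is ever fitted here: centering removes it, which is what lets the confounders enter nonlinearly.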

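But how do we estimate the conditional mean $m(x)$ and the propensity score $e(x)$?

- Use modern machine learning models! One could use boosting, random forests, etc. to estimate $m(x)$ and $e(x)$, then plug the predictions into the residual-on-residual regression.
- Issue with direct plug-in of estimates: Directly plugging in $\hat m(X_i)$ and $\hat e(X_i)$ fitted on the same data can bias the estimate of $\tau$, because a flexible model that overfits produces residuals that are too small for the very observations it was trained on.
- Solution via cross-fitting: Use cross-fitting, where the prediction for observation $i$ is obtained from a model fitted without using observation $i$. We write these out-of-fold predictions as $\hat m^{(-i)}(X_i)$ and $\hat e^{(-i)}(X_i)$.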
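The cross-fitting recipe can be sketched as follows. A least-squares polynomial fit stands in for the machine learning model; that choice, the fold count, and all function names are assumptions made to keep the example dependency-free, and any flexible learner could be swapped in.

```python
import numpy as np

def poly_features(x, degree=5):
    """Polynomial basis 1, x, ..., x^degree for a one-dimensional covariate."""
    return np.vander(x, degree + 1, increasing=True)

def fit_predict(x_train, y_train, x_test, degree=5):
    """Least-squares polynomial fit; a simple stand-in for a flexible ML model."""
    coef, *_ = np.linalg.lstsq(poly_features(x_train, degree), y_train, rcond=None)
    return poly_features(x_test, degree) @ coef

def cross_fit(x, target, n_folds=5, seed=0):
    """Out-of-fold predictions: observation i is predicted by a model that never saw i."""
    rng = np.random.default_rng(seed)
    folds = rng.permutation(len(x)) % n_folds   # random, balanced fold labels
    preds = np.empty(len(x))
    for k in range(n_folds):
        test = folds == k
        preds[test] = fit_predict(x[~test], target[~test], x[test])
    return preds

rng = np.random.default_rng(2)
n, tau = 5000, 1.5
X = rng.uniform(-2, 2, size=n)
W = rng.binomial(1, 1.0 / (1.0 + np.exp(-X)))
Y = tau * W + np.sin(X) + X ** 2 + rng.normal(size=n)

m_hat = cross_fit(X, Y)                                     # estimate of E[Y | X]
e_hat = np.clip(cross_fit(X, W.astype(float)), 0.01, 0.99)  # estimate of E[W | X]

w_res = W - e_hat
tau_hat = np.sum(w_res * (Y - m_hat)) / np.sum(w_res ** 2)
print(f"cross-fitted estimate of tau: {tau_hat:.3f}")
```

The propensity predictions are clipped away from 0 and 1 because a least-squares fit to a binary target is not guaranteed to stay inside the unit interval.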

Relaxing Assumption 3: Non-constant treatment effects

Non-constant treatment effects occur when the impact of a treatment varies across different subgroups or individuals. This concept relaxes the assumption of homogeneous treatment effects, where the treatment is assumed to have the same impact on all units.

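We could specify certain subgroups and run a separate regression for each subgroup to obtain different estimates of $\tau$. A more flexible alternative is to let the treatment effect depend on the covariates directly.

Let’s define,

$$Y_i = \tau(X_i) W_i + f(X_i) + \varepsilon_i$$

where $\tau(X_i)$ is the conditional average treatment effect (CATE), $\tau(x) = E[Y_i(1) - Y_i(0) \mid X_i = x]$.

Idea: If we imagine we had access to some neighborhood $\mathcal{N}(x)$ in which $\tau$ is constant, we could run the residual-on-residual regression using only the observations in that neighborhood:

$$\hat\tau(x) = \arg\min_{\tau} \sum_{i=1}^{n} 1\{X_i \in \mathcal{N}(x)\} \left(Y_i - \hat m^{(-i)}(X_i) - \tau \left(W_i - \hat e^{(-i)}(X_i)\right)\right)^2$$

where $\hat m^{(-i)}$ and $\hat e^{(-i)}$ denote cross-fitted estimates of the conditional mean of the outcome and of the propensity score.

This is conceptually what Causal Forest does: it estimates the treatment effect $\tau(x)$ for a target point $x$ by running a weighted residual-on-residual regression, in which the hard neighborhood indicator is replaced by weights $\alpha_i(x)$ that measure how relevant training sample $i$ is for $x$.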
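The neighborhood idea can be sketched with a simple fixed-radius window around the target point. Everything in this example is an illustrative assumption: the simulated step-shaped effect, the randomized treatment, the oracle nuisance functions, and the helper names. The hard window is only a stand-in for data-adaptive weights.

```python
import numpy as np

def weighted_residual_regression(y_res, w_res, weights):
    """Weighted least-squares slope (no intercept) of y_res on w_res."""
    return np.sum(weights * w_res * y_res) / np.sum(weights * w_res ** 2)

rng = np.random.default_rng(3)
n = 20000
X = rng.uniform(-1, 1, size=n)
e = np.full(n, 0.5)                  # randomized treatment, for simplicity
W = rng.binomial(1, e)
tau_x = 1.0 + 2.0 * (X > 0)          # effect is 1 for x <= 0 and 3 for x > 0
f = X
Y = tau_x * W + f + rng.normal(size=n)

# Oracle conditional mean m(x) = f(x) + tau(x) * e(x).
m = f + tau_x * e
y_res, w_res = Y - m, W - e

def tau_at(x0, radius=0.2):
    """Estimate tau(x0) using only the samples within `radius` of x0."""
    in_neighborhood = (np.abs(X - x0) <= radius).astype(float)
    return weighted_residual_regression(y_res, w_res, in_neighborhood)

tau_left, tau_right = tau_at(-0.5), tau_at(0.5)
print(f"tau(-0.5) ~ {tau_left:.2f}, tau(0.5) ~ {tau_right:.2f}")
```

Passing any non-negative weight vector instead of the 0/1 indicator gives the general weighted version of the same regression.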

These weights play a crucial role, so how does grf 📦 find them?

Random forest as an adaptive neighborhood finder

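Breiman’s random forest for predicting the conditional mean $\mu(x) = E[Y_i \mid X_i = x]$ can be described in two phases:

- Building phase: Build $B$ trees, each of which greedily places covariate splits that maximize the squared difference in subgroup means, $n_L n_R (\bar y_L - \bar y_R)^2$.
- Prediction phase: Aggregate each tree’s prediction to form the final point estimate by averaging the outcomes $Y_i$ that fall into the same terminal leaf $L_b(x)$ as $x$:

$$\hat\mu(x) = \frac{1}{B} \sum_{b=1}^{B} \sum_{i=1}^{n} \frac{Y_i \, 1\{X_i \in L_b(x)\}}{|L_b(x)|} \qquad (1)$$

Here $|L_b(x)|$ is the number of training samples in the leaf of tree $b$ that contains $x$.

Note that this procedure is a double summation, first over trees, then over training samples (see equation (1)). We can swap the order of summation and obtain

$$\hat\mu(x) = \sum_{i=1}^{n} Y_i \, \alpha_i(x), \qquad \alpha_i(x) = \frac{1}{B} \sum_{b=1}^{B} \frac{1\{X_i \in L_b(x)\}}{|L_b(x)|}$$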
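The swap of summation order can be checked numerically on a toy forest. The leaf assignments below are made up by hand (three trees, six training samples) purely for illustration. The sketch computes the per-sample weights and confirms that the weighted average of outcomes matches the average of the per-tree leaf means.

```python
import numpy as np

Y = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])

# leaf_train[b, i] = id of the leaf that training sample i falls into in tree b.
leaf_train = np.array([
    [0, 0, 1, 1, 2, 2],
    [0, 1, 1, 1, 2, 2],
    [0, 0, 0, 1, 1, 1],
])
# leaf_x[b] = id of the leaf that the target point x falls into in tree b.
leaf_x = np.array([1, 1, 0])

def forest_weights(leaf_train, leaf_x):
    """alpha_i(x): average over trees of 1{same leaf as x} / (size of that leaf)."""
    same_leaf = leaf_train == leaf_x[:, None]            # shape (B, n)
    leaf_size = same_leaf.sum(axis=1, keepdims=True)     # shape (B, 1)
    return (same_leaf / leaf_size).mean(axis=0)

alpha = forest_weights(leaf_train, leaf_x)

# Sum over trees first: average the per-tree leaf means.
tree_preds = np.array([Y[leaf_train[b] == leaf_x[b]].mean() for b in range(len(leaf_x))])
mu_by_trees = tree_preds.mean()

# Sum over samples first: weighted average of outcomes.
mu_by_weights = np.sum(alpha * Y)

print(alpha, mu_by_trees, mu_by_weights)
```

Samples that never share a leaf with the target point receive weight zero, and the weights sum to one, so the forest prediction is a locally weighted average of the training outcomes.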

Does above

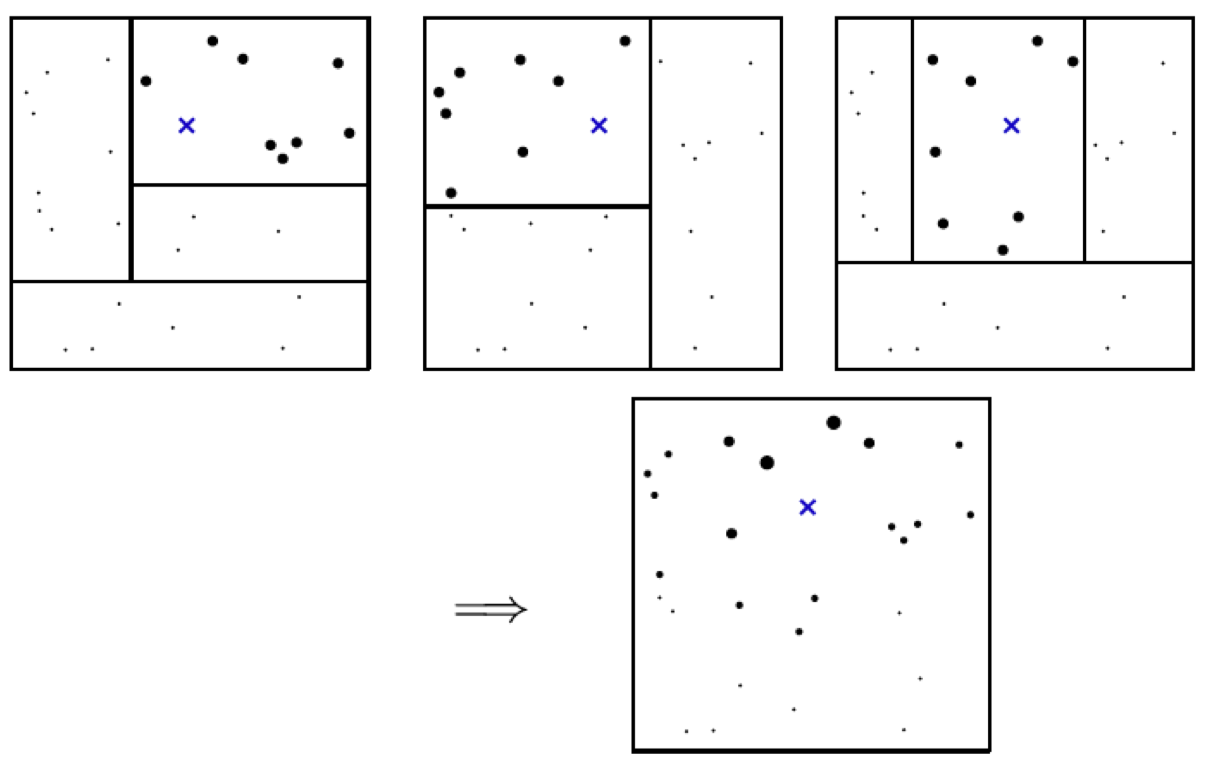

The following image illustrates how the

Causal Forest

Causal Forest essentially combines Breiman (2001) and Robinson (1988) by modifying the steps above to:

- Building phase: Greedily place covariate splits that maximize the squared difference in subgroup treatment effects, $n_L n_R (\hat\tau_L - \hat\tau_R)^2$.
- Prediction phase: Use the resulting forest weights $\alpha_i(x)$ to estimate $\tau(x)$ with a weighted residual-on-residual regression of the centered outcome $Y_i - \hat m^{(-i)}(X_i)$ on the centered treatment $W_i - \hat e^{(-i)}(X_i)$.

That is, Causal Forest runs a “forest”-localized version of Robinson’s regression. This adaptive weighting (instead of leaf-averaging), coupled with forest construction details known as “honesty” and “subsampling”, can be used to give asymptotic guarantees for estimation and inference with random forests (Wager & Athey, 2018).

Efficiently estimating summaries of the CATEs

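What about estimating summaries of $\tau(x)$, such as the average treatment effect (ATE)?

For estimating ATE, the most intuitive approach is to average the CATE, i.e. $\hat\tau = \frac{1}{n} \sum_{i=1}^{n} \hat\tau(X_i)$.

Robins, Rotnitzky & Zhao (1994) showed that the so-called Augmented Inverse Probability Weighted (AIPW) estimator is asymptotically optimal for estimating the ATE $\tau$:

$$\hat\tau_{AIPW} = \frac{1}{n} \sum_{i=1}^{n} \left( \hat\mu(X_i, 1) - \hat\mu(X_i, 0) + \frac{W_i}{\hat e(X_i)} \left(Y_i - \hat\mu(X_i, 1)\right) - \frac{1 - W_i}{1 - \hat e(X_i)} \left(Y_i - \hat\mu(X_i, 0)\right) \right)$$

where $\hat\mu(x, w)$ is an estimate of $\mu(x, w) = E[Y_i \mid X_i = x, W_i = w]$ and $\hat e(x)$ is an estimate of the propensity score.

To interpret the AIPW estimator:

- $\frac{1}{n} \sum_{i=1}^{n} \left(\hat\mu(X_i, 1) - \hat\mu(X_i, 0)\right)$ is the direct estimate of the ATE from the outcome model.
- $\frac{W_i}{\hat e(X_i)} \left(Y_i - \hat\mu(X_i, 1)\right) - \frac{1 - W_i}{1 - \hat e(X_i)} \left(Y_i - \hat\mu(X_i, 0)\right)$ is the inverse-propensity-weighted residual of the outcome model.
- The AIPW estimator utilizes propensity score weighting on the residuals to debias the direct estimate.
- One key property of the AIPW estimator is its “double robustness”, which means that the estimator remains consistent and asymptotically normal even if one of the outcome model or the propensity score model is misspecified, as long as the other is correctly specified. For proof, please refer to Stefan Wager’s Lecture 3 notes in “STATS 361: Causal Inference”.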

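The expression for the above AIPW estimator can be rearranged and expressed as

$$\hat\tau_{AIPW} = \frac{1}{n} \sum_{i=1}^{n} \left( \hat\tau(X_i) + \frac{W_i - \hat e(X_i)}{\hat e(X_i) \left(1 - \hat e(X_i)\right)} \left(Y_i - \hat\mu(X_i, W_i)\right) \right)$$

We can understand the above terms as:

- $\hat\tau(X_i) = \hat\mu(X_i, 1) - \hat\mu(X_i, 0)$: the estimated CATE for unit $i$.
- $\hat e(X_i)$: the estimated propensity score $E[W_i \mid X_i]$.
- $\hat\mu(X_i, W_i)$: the estimated conditional mean of the outcome under the treatment that unit $i$ actually received.
- $\frac{W_i - \hat e(X_i)}{\hat e(X_i) (1 - \hat e(X_i))}$: the debiasing weight, equal to $1 / \hat e(X_i)$ for treated units and $-1 / (1 - \hat e(X_i))$ for control units.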
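A small simulation can illustrate the AIPW estimator and its double robustness. The data-generating process, the function name `aipw_ate`, and the deliberately wrong nuisance inputs below are assumptions chosen for illustration, and the nuisance functions are taken as known rather than estimated.

```python
import numpy as np

def aipw_ate(Y, W, mu0_hat, mu1_hat, e_hat):
    """AIPW estimate of the ATE and its standard error from per-unit scores."""
    direct = mu1_hat - mu0_hat
    correction = (W / e_hat) * (Y - mu1_hat) - ((1 - W) / (1 - e_hat)) * (Y - mu0_hat)
    scores = direct + correction
    return scores.mean(), scores.std(ddof=1) / np.sqrt(len(Y))

rng = np.random.default_rng(4)
n = 20000
X = rng.uniform(-1, 1, size=n)
e = 1.0 / (1.0 + np.exp(-X))          # true propensity score
W = rng.binomial(1, e)
mu0 = X                                # E[Y | X, W = 0]
mu1 = X + 1.0 + X ** 2                 # E[Y | X, W = 1], so tau(x) = 1 + x^2
Y = np.where(W == 1, mu1, mu0) + rng.normal(size=n)
true_ate = 1.0 + 1.0 / 3.0             # E[1 + X^2] for X ~ Uniform(-1, 1)

# Both nuisance components correct.
ate_oracle, se_oracle = aipw_ate(Y, W, mu0, mu1, e)
# Outcome model badly wrong (all zeros), propensity score correct.
ate_wrong_outcome, _ = aipw_ate(Y, W, np.zeros(n), np.zeros(n), e)
# Propensity score wrong (constant 0.5), outcome model correct.
ate_wrong_propensity, _ = aipw_ate(Y, W, mu0, mu1, np.full(n, 0.5))

print(true_ate, ate_oracle, ate_wrong_outcome, ate_wrong_propensity)
```

With only one of the two nuisance inputs correct, the estimate should still be close to the true ATE in this simulation, although typically with a larger variance than when both are correct.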

References

- Chernozhukov, Victor, Denis Chetverikov, Mert Demirer, Esther Duflo, Christian Hansen, Whitney Newey, and James Robins. 2018. “Double/Debiased Machine Learning for Treatment and Structural Parameters.” The Econometrics Journal 21 (1): C1–68. https://doi.org/10.1111/ectj.12097.
- Athey, Susan, Julie Tibshirani, and Stefan Wager. 2019. “Generalized Random Forests.” The Annals of Statistics 47 (2): 1148–78. https://doi.org/10.1214/18-AOS1709.
- Robinson, Peter M. 1988. “Root-N-Consistent Semiparametric Regression.” Econometrica 56 (4): 931–54.
- Wager, Stefan, and Susan Athey. 2018. “Estimation and Inference of Heterogeneous Treatment Effects Using Random Forests.” Journal of the American Statistical Association 113 (523): 1228–42.
- Wager, Stefan. 2022. “STATS 361: Causal Inference.” Lecture notes, Stanford University. https://web.stanford.edu/~swager/stats361.pdf.
- Chernozhukov, Victor, Whitney K. Newey, and Rahul Singh. 2022. “Automatic Debiased Machine Learning of Causal and Structural Effects.” Econometrica 90 (3): 967–1027. https://doi.org/10.3982/ECTA18515.