Notes on Matrix Completion Methods for Causal Panel Data Models

Here are my study notes on the matrix completion method for causal panel data models proposed by Athey et al. (2021).

1. Setup and Notation

-

-

-

Rows (

-

Columns (

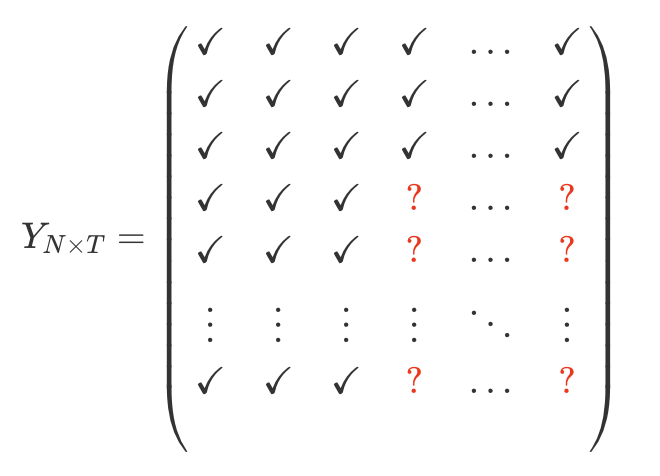

Objective: To impute the missing potential outcomes in

-

From here on, I drop the

-

I will simply use

-

This is now a matrix completion problem.

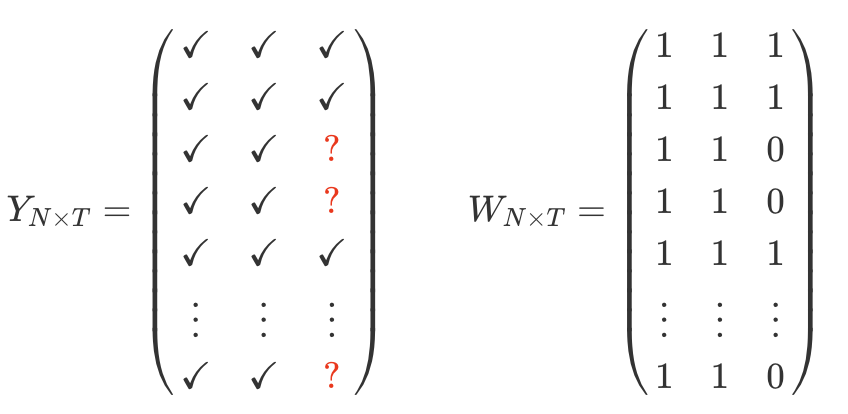

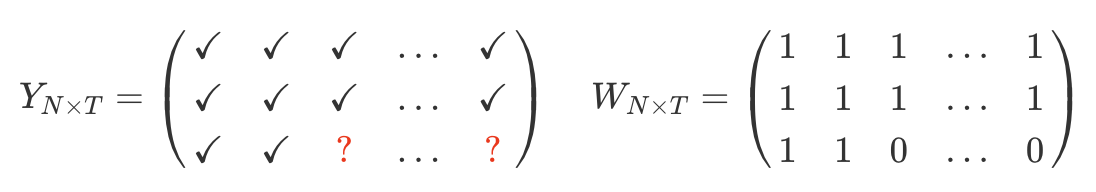

For illustration, suppose the missing data follow a block structure, in which a subset of the units adopts an irreversible treatment at a particular point in time.

-

Let

-

Let

-

The causal parameter of interest is the average treatment effect on the treated (ATT):
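As a sketch of the estimand, in notation assumed here rather than recovered from these notes ($W_{it} = 1$ marks a treated unit-period pair, and $Y_{it}(1)$, $Y_{it}(0)$ are the potential outcomes with and without treatment), the ATT can be written as an average over the treated pairs:

$$
\tau = \frac{\sum_{i,t} W_{it}\,\bigl[Y_{it}(1) - Y_{it}(0)\bigr]}{\sum_{i,t} W_{it}}
$$

Only $Y_{it}(1)$ is observed for treated pairs, so the $Y_{it}(0)$ terms are exactly the entries that have to be imputed.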

2. Horizontal / Unconfoundedness Regression

The unconfoundedness literature operates on the concept that “history is a guide to the future.” As such, unconfoundedness methods express outcomes in the treated period as a weighted composition of outcomes in the pretreatment periods (Shen et al. 2023).

The unconfoundedness literature focuses on:

- The single-treated-period structure with a thin matrix

- A large number of treated and control units

- Impute the missing potential outcomes using control units with similar lagged outcomes

In this setting, we have the following assumptions.

Idea: If, conditional on the pre-treatment outcomes, treatment assignment is as good as random, then we can use flexible control methods (e.g., doubly robust methods).

The horizontal or unconfoundedness type of regression is to (1) regress the last period outcome on the lagged outcomes and (2) use the estimated regression to predict the missing potential outcomes:

-

-

Predict

For the units with

More specifically, it assumes there is a relation between outcomes in the treated period and the pre-treatment periods that is the same for all units. Note:

A more flexible, nonparametric version of this estimator would correspond to matching:

- For each treated unit

If
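The two steps of the horizontal regression can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the estimator from any particular package: the function name `horizontal_regression`, the boolean `treated` vector, and the simulated data are all hypothetical, and plain least squares stands in for whatever flexible method one would use in practice.

```python
import numpy as np

def horizontal_regression(Y, treated):
    """Impute the last-period control outcome for treated units.

    Y       : (N, T) array; Y[treated, -1] is treated as missing.
    treated : boolean array of length N (hypothetical input format).
    """
    # Design matrix: an intercept plus the T-1 lagged outcomes of each unit.
    X = np.column_stack([np.ones(Y.shape[0]), Y[:, :-1]])
    # (1) Fit the regression on control units only.
    beta, *_ = np.linalg.lstsq(X[~treated], Y[~treated, -1], rcond=None)
    # (2) Predict the missing potential outcomes for the treated units.
    return X[treated] @ beta

# Toy data (simulated): the last period is an exact linear function of the lags.
rng = np.random.default_rng(0)
lags = rng.normal(size=(200, 3))
last = 1.0 + lags @ np.array([0.5, -0.2, 0.3])
treated = np.arange(200) < 20
Y = np.column_stack([lags, last])
Y[treated, -1] = np.nan            # hide the treated units' last-period outcome
imputed = horizontal_regression(Y, treated)
```

Because the simulated relation between the last period and the lags is the same for every unit, the fit on controls carries over to the treated units; that shared relation is what the method relies on.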

3. Vertical Regression and Synthetic Control

The synthetic controls literature is built on the concept that “similar units behave similarly.” Therefore, synthetic controls methods express the treated unit’s outcomes as a weighted composition of control units’ outcomes (Shen et al. 2023).

Synthetic control methods focus on:

- The single-treated-unit block structure with a fat matrix

-

A large number of pre-treatment periods (i.e.

-

In this setting, the identification depends on the assumption that:

Previous studies show how the synthetic control method can be interpreted as regressing the outcomes for the treated unit prior to the treatment on the outcomes for the control units in the same periods.

For the treated unit in period

where

This is referred to as vertical regression.
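A minimal NumPy sketch of this idea follows. It uses unconstrained least squares with an intercept, which is an assumption made for brevity: synthetic control proper typically restricts the weights (for example to be nonnegative and to sum to one), and that restriction is not implemented here. The function name, the convention that the treated unit is the last row, and the simulated data are hypothetical.

```python
import numpy as np

def vertical_regression(Y, T0):
    """Impute the treated unit's control outcomes in periods T0, ..., T-1.

    Y  : (N, T) array whose last row is the single treated unit.
    T0 : number of pre-treatment periods (hypothetical argument name).
    """
    controls, treated_unit = Y[:-1], Y[-1]
    # Pre-treatment periods are the "observations"; control units are regressors.
    X_pre = np.column_stack([np.ones(T0), controls[:, :T0].T])
    omega, *_ = np.linalg.lstsq(X_pre, treated_unit[:T0], rcond=None)
    # Apply the same cross-unit weights to the post-treatment periods.
    X_post = np.column_stack([np.ones(Y.shape[1] - T0), controls[:, T0:].T])
    return X_post @ omega

# Toy data (simulated): the treated unit is an exact combination of 3 controls.
rng = np.random.default_rng(1)
controls = rng.normal(size=(3, 30))
truth = 2.0 + np.array([0.6, 0.3, 0.1]) @ controls
Y = np.vstack([controls, truth])
Y[-1, 25:] = np.nan                # hide the treated unit's post-treatment outcomes
imputed = vertical_regression(Y, T0=25)
```

Compared with the horizontal regression, the roles of rows and columns are swapped: the regression is run across periods, and the fitted weights describe a relation between units.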

More specifically, it assumes there is a relation between different units that is stable over time. Note:

A more flexible, nonparametric version of this estimator would correspond to matching:

- For each post-treatment period

If

4. Panel with Fixed Effects

Before moving on to fixed effects, factor, and interactive fixed effects models, here is a short summary.

Horizontal regression:

- Data: Thin matrix (many units, few periods), single treated period (the last period)

- Strategy: Use controls to regress the last-period outcome on the lagged outcomes

-

-

-

- Does not work well if the matrix is fat (many periods)

-

Key identifying assumption:

The horizontal regression focuses on a pattern in the time path of the outcome

Vertical regression:

-

Data: Fat matrix (few units, many periods), single treated unit (unit

- Strategy: Use pretreatment periods to regress the treated unit's outcomes on the control units' outcomes

-

-

-

- Does not work well if the matrix is thin (many units)

-

Key identifying assumption:

The vertical regression focuses on a pattern between units at times when we observe all outcomes, and assumes this pattern continues to hold for periods when some outcomes are missing.

However, by focusing on only one of these patterns, cross-section or time series, these approaches ignore alternative patterns that may help in imputing the missing values. One alternative is to consider approaches that exploit both stable patterns over time and stable patterns across units. Such methods have a long history in the panel data literature, including two-way fixed effects models and factor and interactive fixed effects models.

In the absence of covariates, the common two-way fixed effect model is:

In this setting, the identification depends on the assumption that:

So the predicted outcome based on the unit and time fixed effects is:

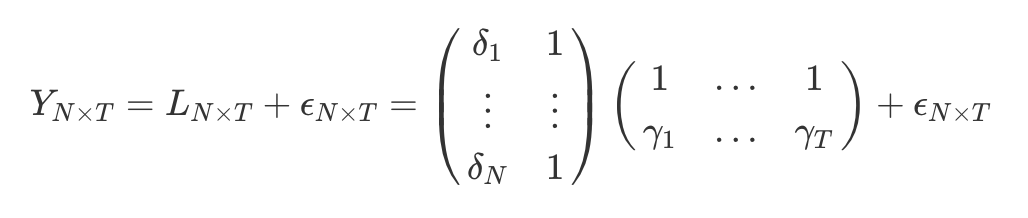
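The two-way fixed effects prediction can be sketched as a least-squares fit of one unit effect and one time effect on the observed entries only. The names `twfe_impute`, `gamma`, and `delta`, and the block-missing toy data, are assumptions made for this illustration; they are not taken from the notes or from a specific package.

```python
import numpy as np

def twfe_impute(Y, observed):
    """Fit Y[i, t] ~ gamma[i] + delta[t] on observed cells; predict every cell."""
    N, T = Y.shape
    rows, cols = np.nonzero(observed)
    # One dummy column per unit and one per period, one row per observed cell.
    D = np.zeros((rows.size, N + T))
    D[np.arange(rows.size), rows] = 1.0
    D[np.arange(rows.size), N + cols] = 1.0
    # The dummies are collinear, so lstsq returns the minimum-norm solution;
    # the sums gamma[i] + delta[t] are still pinned down when the pattern of
    # observed cells connects all units and periods.
    coef, *_ = np.linalg.lstsq(D, Y[rows, cols], rcond=None)
    gamma, delta = coef[:N], coef[N:]
    return gamma[:, None] + delta[None, :]

# Toy data (simulated): purely additive outcomes with a treated block removed.
rng = np.random.default_rng(2)
N, T, T0 = 8, 6, 4
gamma_true, delta_true = rng.normal(size=N), rng.normal(size=T)
Y_full = gamma_true[:, None] + delta_true[None, :]
observed = np.ones((N, T), dtype=bool)
observed[:3, T0:] = False          # first 3 units are treated from period T0 on
Y = np.where(observed, Y_full, np.nan)
Y_hat = twfe_impute(Y, observed)
```

In this noiseless example the imputed block matches the hidden values, which is what the additive structure implies: each missing cell is the sum of a unit effect learned from that unit's pre-treatment periods and a time effect learned from the control units.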

In a matrix form, the model can be rewritten as:

The matrix formulation of the identification assumption is:

5. Interactive Fixed Effects

5.1 Why Use Interactive Fixed Effects?

In panel data, traditional fixed effects control for differences across units and time periods. But what if there are hidden factors that evolve over time and affect each unit differently — like how each state reacts differently to national economic trends?

Additive unit and time fixed effects cannot handle that. This is where interactive fixed effects shine: they model these latent time-varying confounders using a flexible low-rank structure.

5.2 Interactive Fixed Effects Model

For interactive fixed effects, instead of exploiting additive structure in unit and time effects, we exploit the low rank or interactive structure of unit and time fixed effects for panel data regression.
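A small numerical check can make the contrast between additive and interactive structure concrete. In the sketch below, `Lambda` (loadings), `F` (factors), and `R` (number of factors) are names assumed for illustration. It shows that a product of loadings and factors has rank at most `R`, and that the additive two-way structure is itself a special case with two factors.

```python
import numpy as np

rng = np.random.default_rng(3)
N, T, R = 50, 20, 3

Lambda = rng.normal(size=(N, R))   # factor loadings, one row per unit
F = rng.normal(size=(T, R))        # factors, one row per period
L_ife = Lambda @ F.T               # interactive structure: rank at most R

gamma, delta = rng.normal(size=N), rng.normal(size=T)
L_twfe = gamma[:, None] + delta[None, :]   # additive unit + time effects

# The same additive matrix written as a two-factor model:
# loadings (gamma_i, 1) and factors (1, delta_t).
loadings = np.column_stack([gamma, np.ones(N)])
factors = np.column_stack([np.ones(T), delta])
L_twfe_as_factors = loadings @ factors.T

rank_ife = np.linalg.matrix_rank(L_ife)
rank_twfe = np.linalg.matrix_rank(L_twfe)
```

The additive model fixes one loading and one factor to a constant, while the interactive model lets every unit respond to every factor with its own coefficient.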

The common interactive fixed effect model is:

where

-

- Note: Factors are unobserved, time-varying variables that capture common influences or shocks affecting all units at time $t$.

-

- Note: Factor loadings are unobserved, unit-specific coefficients that determine how much each unit

Note that both factors and factor loadings are parameters that need to be estimated. Typically it is assumed that the number of factors

Again, the identification depends on the assumption that:

The predicted outcome based on the interactive fixed effects would be:

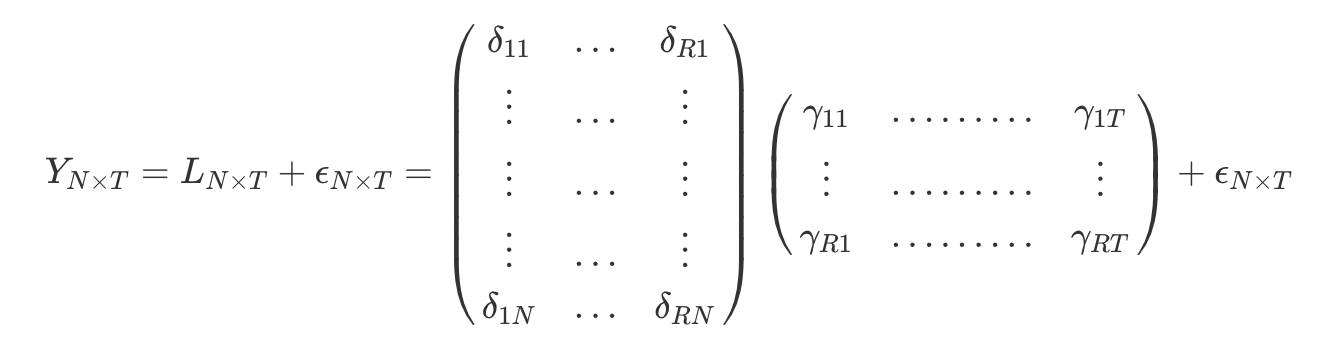

In a matrix form, the

The matrix formulation of the identification assumption is:

Using the interactive fixed effects model, we would estimate

6. The Matrix Completion with Nuclear Norm Minimization Estimator

In the absence of covariates, the

2. There exists staggered entry relating to the weights such that

3.

In a more general case, $Y_{it}$ is equal to:

where

-

-

-

-

-

We do not necessarily need the fixed effects

With too many parameters, especially for

But how do we regularize

By singular value decomposition (SVD), we have

where

-

-

-

Rank of

There are three ways to regularize

-

The Frobenius norm imputes missing values as 0.

-

The rank norm is not computationally feasible for general missing data patterns.

-

The preferred nuclear norm leads to a low-rank matrix and is computationally feasible.
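All three quantities are functions of the singular values, which a short NumPy check can illustrate. The example matrix `L` and the tolerance `1e-10` used to count nonzero singular values are assumptions made for this sketch.

```python
import numpy as np

rng = np.random.default_rng(4)
L = rng.normal(size=(5, 2)) @ rng.normal(size=(2, 4))   # a rank-2 example matrix
sigma = np.linalg.svd(L, compute_uv=False)              # singular values of L

frobenius = np.sqrt(np.sum(sigma ** 2))   # square root of the sum of squares
rank = int(np.sum(sigma > 1e-10))         # number of nonzero singular values
nuclear = np.sum(sigma)                   # sum of the singular values
```

The nuclear norm plays a role analogous to the lasso penalty applied to the singular values: it is convex, and penalizing it pushes small singular values to exactly zero, which is why it yields low-rank solutions while staying computationally tractable.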

So the Matrix-Completion with Nuclear Norm Minimization (MC-NNM) estimator uses the nuclear norm:

For the general case, we estimate

And we choose
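A nuclear-norm-penalized fit can be approximated by iterating two steps: fill the missing cells with the current estimate, then soft-threshold the singular values. The sketch below is a simplified illustration under stated assumptions, not the MCPanel implementation: it omits fixed effects and covariates, the threshold `tau` stands in for the regularization parameter up to scaling, and the function name `soft_impute`, its arguments, and the simulated data are hypothetical.

```python
import numpy as np

def soft_impute(Y, observed, tau, n_iter=500, tol=1e-8):
    """Iterative singular-value soft-thresholding on a partially observed matrix.

    Y        : (N, T) array; values at unobserved cells are ignored.
    observed : boolean (N, T) mask of observed cells.
    tau      : singular-value threshold (assumed tuning parameter).
    """
    Y0 = np.where(observed, Y, 0.0)
    L = np.zeros_like(Y0)
    for _ in range(n_iter):
        # Keep observed outcomes, plug in the current estimate elsewhere.
        filled = np.where(observed, Y0, L)
        U, s, Vt = np.linalg.svd(filled, full_matrices=False)
        # Shrink every singular value by tau and truncate at zero.
        L_new = (U * np.maximum(s - tau, 0.0)) @ Vt
        converged = np.linalg.norm(L_new - L) <= tol * max(1.0, np.linalg.norm(L))
        L = L_new
        if converged:
            break
    return L

# Toy data (simulated): a rank-2 matrix with a treated block removed.
rng = np.random.default_rng(5)
Y_full = rng.normal(size=(30, 2)) @ rng.normal(size=(2, 12))
observed = np.ones_like(Y_full, dtype=bool)
observed[:5, 8:] = False
Y = np.where(observed, Y_full, np.nan)
L_hat = soft_impute(Y, observed, tau=0.1)
```

With `tau = 0` and a fully observed matrix the procedure returns the data unchanged, and with a very large `tau` it shrinks everything to zero; useful values lie in between, and in practice the threshold would be tuned, for example by holding out some observed cells and comparing predictions against them.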

References

Athey, Susan, Mohsen Bayati, Nikolay Doudchenko, Guido Imbens, and Khashayar Khosravi (2021), “Matrix Completion Methods for Causal Panel Data Models,” Journal of the American Statistical Association, 116 (536), 1716–30.

Liu, Licheng, Ye Wang, and Yiqing Xu (2024), “A Practical Guide to Counterfactual Estimators for Causal Inference with Time-Series Cross-Sectional Data,” American Journal of Political Science, 68 (1), 160–76.

Shen, Dennis, Peng Ding, Jasjeet Sekhon, and Bin Yu (2023), “Same Root Different Leaves: Time Series and Cross-Sectional Methods in Panel Data,” Econometrica, 91 (6), 2125–54.

Chapter 8 Matrix Completion Methods | Machine Learning-Based Causal Inference Tutorial

7.1 Simple Exponential Smoothing | Forecasting: Principles And Practice (2nd Ed)

Bai, Jushan (2009), “Panel Data Models With Interactive Fixed Effects,” Econometrica, 77 (4), 1229–79.

R packages 📦 to implement:

- https://github.com/susanathey/MCPanel (matrix completion)

- https://github.com/synth-inference/synthdid (synthetic difference-in-differences)

- https://github.com/ebenmichael/augsynth (augmented synthetic control)