Beta Distribution — Intuition, Derivation, and Examples

Motivation

Model probabilities

The Beta distribution is a probability distribution on probabilities.

The Beta distribution can be understood as a distribution of probabilities: it represents all the possible values of a probability when we don’t know what that probability is, along with how plausible each value is. For example:

- the Click-Through Rate of your advertisement
- the conversion rate of customers actually purchasing in your store
- how likely a customer is to become “inactive”

Because the Beta distribution models a probability, its domain is bounded between 0 and 1.
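A quick numerical check of this bounded support, as a minimal base-R sketch. Beta(2, 5) is an arbitrary example choice. `dbeta` returns 0 outside [0, 1], and the density integrates to 1 over [0, 1].

```r
# Arbitrary example parameters
a <- 2
b <- 5

# The density is zero outside the unit interval
outside <- dbeta(c(-0.5, 1.5), shape1 = a, shape2 = b)
print(outside)  # 0 0

# ...and all of its probability mass lies inside [0, 1]
total <- integrate(dbeta, lower = 0, upper = 1, shape1 = a, shape2 = b)$value
print(total)
```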

Generalization of Uniform Distribution

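Name a continuous, bounded random variable other than the Uniform. The Beta distribution is one answer, and this is another way to look at it: it is continuous and bounded between 0 and 1, but its density does not have to be flat. In fact, Beta(1, 1) is exactly the Uniform(0, 1) distribution, so the Beta family generalizes the Uniform.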
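The Uniform special case can be checked directly with base R's `dbeta` and `dunif`. This is a small sketch on interior grid points chosen arbitrarily; Beta(2, 2) is included only as an example of a non-flat member of the family.

```r
# Interior points of [0, 1]
x <- seq(0.05, 0.95, by = 0.1)

# Beta(1, 1) has exactly the Uniform(0, 1) density
beta11 <- dbeta(x, shape1 = 1, shape2 = 1)
unif01 <- dunif(x, min = 0, max = 1)
print(isTRUE(all.equal(beta11, unif01)))  # TRUE

# Other parameter choices give a density that is not flat, e.g. Beta(2, 2)
print(dbeta(x, shape1 = 2, shape2 = 2))
```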

What is a Conjugate Prior?

The Beta distribution is the conjugate prior for the success probability of the Bernoulli, binomial, negative binomial and geometric distributions in Bayesian inference. (Notice that these are exactly the distributions built from success/failure trials.)

Computing a posterior using a conjugate prior is very convenient, because you avoid the expensive numerical computation otherwise involved in Bayesian inference. Conjugate prior = convenient prior.

For example, the Beta distribution is a conjugate prior to the binomial. If we choose Beta(α, β) as the prior during the modeling phase, we already know that the posterior will also be a Beta distribution. Therefore, after observing x successes in n more trials, you can compute the posterior simply by adding the number of successes (x) and failures (n-x) to the existing parameters α and β, respectively, instead of multiplying the likelihood by the prior and normalizing. The posterior is a Beta distribution with parameters (x+α, n-x+β).
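To see that the shortcut gives the same answer as the brute-force route, here is a minimal base-R sketch. The prior Beta(2, 2) and the data (14 successes in 20 trials) are made-up example values. It computes the posterior once by adding counts and once by multiplying prior and likelihood on a grid and normalizing numerically.

```r
# Prior Beta(a0, b0) and observed data (all example numbers, not from the article)
a0 <- 2
b0 <- 2
n <- 20  # trials
x <- 14  # successes

# Conjugate update: add successes to alpha and failures to beta
a_post <- a0 + x
b_post <- b0 + (n - x)
print(c(a_post, b_post))  # 16 8

# Brute-force check: prior * likelihood on a grid of p, normalized numerically
h <- 0.001
p <- seq(h / 2, 1 - h / 2, by = h)  # grid cell midpoints
unnormalized <- dbeta(p, a0, b0) * dbinom(x, size = n, prob = p)
grid_posterior <- unnormalized / sum(unnormalized * h)

# The two posteriors agree up to discretization error
max_diff <- max(abs(grid_posterior - dbeta(p, a_post, b_post)))
print(max_diff)
```

The grid version needs a normalizing sum over every candidate value of p; the conjugate version needs two additions, which is the convenience the paragraph above describes.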

What is the Intuition?

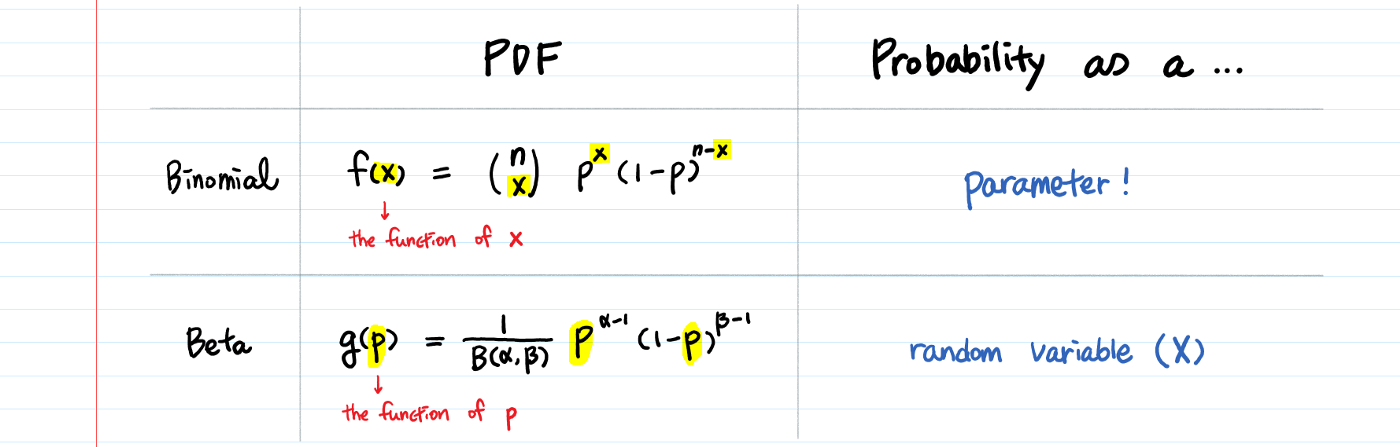

The intuition for the Beta distribution comes into play when we look at it through the lens of the binomial distribution.

The difference between the binomial and the beta is that the former models the number of successes (x), while the latter models the probability (p) of success.

In other words, the probability is a parameter in the binomial; in the Beta, the probability is a random variable.
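The two views share the same functional form p^x (1 - p)^(n - x). As a sketch with arbitrary example values (7 successes in 10 trials), the binomial treats it as a function of x for fixed p, while Beta(x + 1, n - x + 1) treats it as a density in p; the two differ only by the constant factor n + 1.

```r
n <- 10  # trials (example values)
x <- 7   # observed successes

# Binomial: p is a fixed parameter and the number of successes varies
pmf <- dbinom(0:n, size = n, prob = 0.7)
print(sum(pmf))  # a pmf over x = 0..n, sums to 1

# Beta: the data are fixed and p is the random variable on [0, 1]
p <- c(0.3, 0.5, 0.7, 0.9)
beta_density <- dbeta(p, shape1 = x + 1, shape2 = n - x + 1)
binom_in_p <- dbinom(x, size = n, prob = p)

# Same functional form in p; only the constant differs, by a factor of n + 1
print(beta_density / binom_in_p)  # 11 11 11 11
```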

Interpretation of α, β

You can think of α-1 as the number of successes and β-1 as the number of failures, just like the x and n-x terms in the binomial.

You can choose α and β to match what you believe about the probability before seeing any data.

- If you think the probability of success is very high, let’s say 90%, set 90 for α and 10 for β.

- If you think the probability of success is very low, say 10%, set 10 for α and 90 for β.
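A small sketch of what these two choices encode, using the Beta mean α/(α+β). The helper `beta_mean` is a hypothetical name introduced here. Swapping α and β simply mirrors the density around 0.5.

```r
# Hypothetical helper: mean of Beta(a, b)
beta_mean <- function(a, b) a / (a + b)

print(beta_mean(90, 10))  # 0.9: success is believed very likely
print(beta_mean(10, 90))  # 0.1: success is believed very unlikely

# Swapping alpha and beta mirrors the density around p = 0.5
p <- seq(0.01, 0.99, by = 0.01)
mirrored <- isTRUE(all.equal(dbeta(p, 90, 10), dbeta(1 - p, 10, 90)))
print(mirrored)  # TRUE
```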

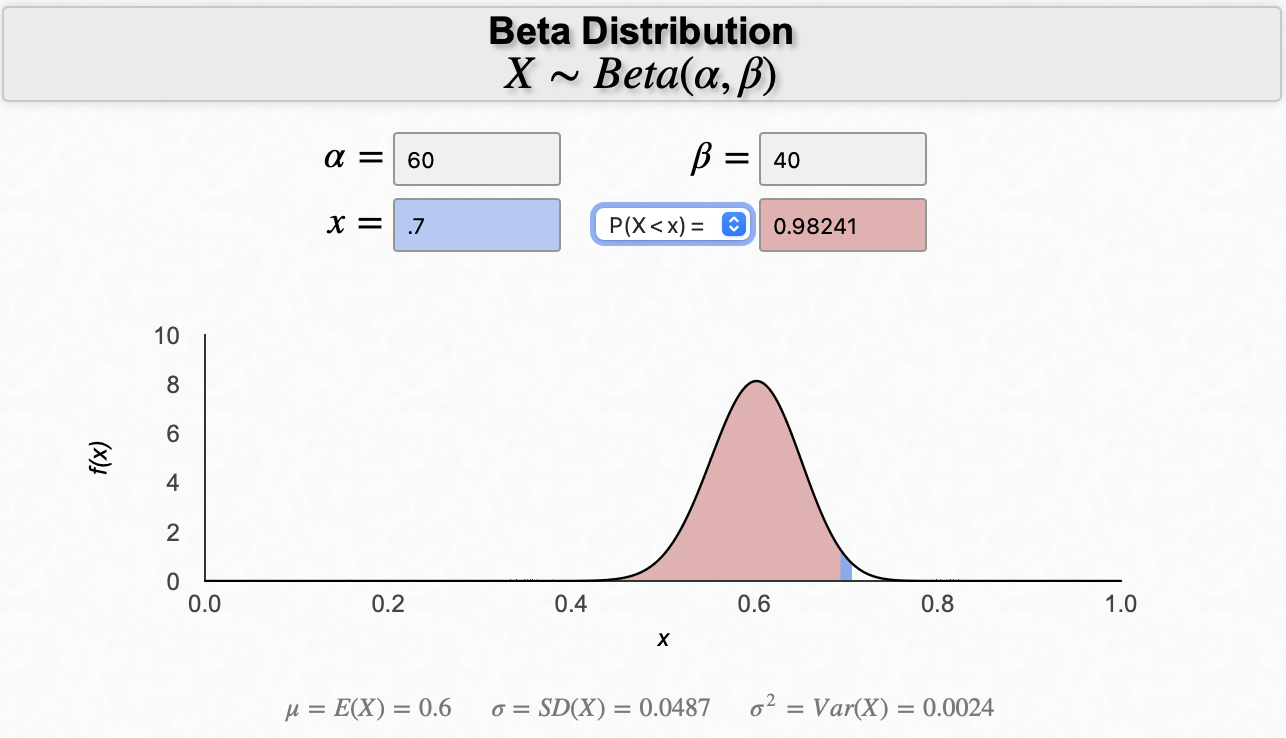

As α becomes larger (more successful events), the bulk of the probability distribution will shift towards the right, whereas an increase in β moves the distribution towards the left (more failures).

Also, the distribution narrows if α and β both increase (keeping their ratio fixed), because we are more certain.
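Both effects can be read off the closed-form mean α/(α+β) and variance αβ/((α+β)²(α+β+1)). This sketch tabulates them for a few arbitrary example parameter pairs; `beta_summary` is a hypothetical helper name.

```r
# Hypothetical helper: mean and standard deviation of Beta(a, b)
beta_summary <- function(a, b) {
  c(alpha = a, beta = b,
    mean = a / (a + b),
    sd = sqrt(a * b / ((a + b)^2 * (a + b + 1))))
}

# Raising alpha moves the mass right; raising beta moves it left
shift <- rbind(beta_summary(2, 5), beta_summary(5, 5), beta_summary(5, 2))
print(round(shift, 3))

# Scaling alpha and beta up together keeps the mean at 0.9 but shrinks the sd
narrow <- rbind(beta_summary(9, 1), beta_summary(90, 10), beta_summary(900, 100))
print(round(narrow, 4))
```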

Dr. Bognar at the University of Iowa built a calculator for the Beta distribution, which I found useful and beautiful. You can experiment with different values of α and β and visualize how the shape changes.

Derivation

In this section, we’ll derive the Beta distribution using the Beta-Gamma connections.

Fraction of Waiting Time

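Let $X \sim \text{Gamma}(a, \lambda)$ be your waiting time in line at the bank.

Let $Y \sim \text{Gamma}(b, \lambda)$ be your waiting time in line at the post office.

Assume $X$ and $Y$ are independent. What is the joint distribution of the total waiting time $T = X + Y$ and the fraction of the time spent at the bank, $W = \frac{X}{X+Y}$?

Solution:

Let $t = x + y$ and $w = \frac{x}{x+y}$.

Let $x = tw$ and $y = t(1-w)$ be the inverse transformation. Its Jacobian is

$$\left|\frac{\partial(x, y)}{\partial(t, w)}\right| = \left|\det\begin{pmatrix} w & t \\ 1-w & -t \end{pmatrix}\right| = \left|-tw - t(1-w)\right| = t.$$

The idea is to find the joint PDF $f_{T,W}(t, w)$ with the change-of-variables formula, for $t > 0$ and $0 < w < 1$:

$$
\begin{aligned}
f_{T,W}(t, w) &= f_X(x)\, f_Y(y) \left|\frac{\partial(x, y)}{\partial(t, w)}\right| \\
&= \frac{\lambda^a (tw)^{a-1} e^{-\lambda t w}}{\Gamma(a)} \cdot \frac{\lambda^b \big(t(1-w)\big)^{b-1} e^{-\lambda t (1-w)}}{\Gamma(b)} \cdot t \\
&= \underbrace{\frac{\Gamma(a+b)}{\Gamma(a)\Gamma(b)}\, w^{a-1}(1-w)^{b-1}}_{\text{depends on } w \text{ only}} \cdot \underbrace{\frac{\lambda^{a+b}\, t^{a+b-1} e^{-\lambda t}}{\Gamma(a+b)}}_{\text{Gamma}(a+b,\ \lambda) \text{ PDF}}
\end{aligned}
$$

Integrating $f_{T,W}(t, w)$ over $t$ from $0$ to $\infty$, the Gamma$(a+b, \lambda)$ PDF integrates to 1 and only the $w$ factor remains.

Here we have Beta,

$$f_W(w) = \frac{\Gamma(a+b)}{\Gamma(a)\Gamma(b)}\, w^{a-1}(1-w)^{b-1}, \quad 0 < w < 1,$$

that is, $W \sim \text{Beta}(a, b)$.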
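The bank–post office result can be checked by simulation. This is a minimal Monte Carlo sketch in base R; the shapes a = 3, b = 5 and the common rate λ = 2 are arbitrary example values. It simulates both waiting times and measures the Kolmogorov-Smirnov distance (via `ks.test`) of W from Beta(a, b) and of T from Gamma(a + b, λ).

```r
set.seed(1)
a <- 3       # example shape of the bank waiting time
b <- 5       # example shape of the post office waiting time
lambda <- 2  # example common rate
n_sim <- 1e5

X <- rgamma(n_sim, shape = a, rate = lambda)  # time at the bank
Y <- rgamma(n_sim, shape = b, rate = lambda)  # time at the post office
T_total <- X + Y                              # total waiting time
W <- X / T_total                              # fraction of time at the bank

# Kolmogorov-Smirnov distances to the derived distributions (near 0 if they fit)
ks_W <- ks.test(W, "pbeta", a, b)
ks_T <- ks.test(T_total, "pgamma", shape = a + b, rate = lambda)
print(c(D_W = unname(ks_W$statistic), D_T = unname(ks_T$statistic)))
```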

Beta Function as a normalizing constant

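Note that, because the Beta(a, b) density derived above must integrate to 1 over (0, 1),

$$\int_0^1 \frac{\Gamma(a+b)}{\Gamma(a)\Gamma(b)}\, w^{a-1}(1-w)^{b-1}\, dw = 1 \quad\Longrightarrow\quad \int_0^1 w^{a-1}(1-w)^{b-1}\, dw = \frac{\Gamma(a)\Gamma(b)}{\Gamma(a+b)} = B(a, b).$$

So the normalization constant should be,

$$\frac{1}{B(a, b)} = \frac{\Gamma(a+b)}{\Gamma(a)\Gamma(b)}.$$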
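A numerical sanity check of this identity, sketched in base R with arbitrary example shapes a = 2.5, b = 4: the integral of the unnormalized kernel, the Gamma-function ratio, and R's built-in `beta()` should all agree.

```r
a <- 2.5  # example shape parameters
b <- 4

# Integral of the unnormalized Beta kernel over [0, 1]
kernel_integral <- integrate(function(w) w^(a - 1) * (1 - w)^(b - 1),
                             lower = 0, upper = 1, rel.tol = 1e-8)$value

# Gamma-function form of the Beta function, and R's built-in beta()
gamma_form <- gamma(a) * gamma(b) / gamma(a + b)
print(c(integral = kernel_integral, gamma_form = gamma_form, builtin = beta(a, b)))
```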

Mean of Beta distribution

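As a byproduct of the above derivation, we get the fact that W and T are independent: their joint PDF factors into a function of w alone times a function of t alone. We can use this to derive the mean of the Beta distribution. Since $WT = X$,

$$E[X] = E[WT] = E[W]\, E[T],$$

rearrange to get,

$$E\!\left[\frac{X}{X+Y}\right] = \frac{E[X]}{E[X+Y]}.$$

This result is clearly not true in general, but it holds in our setting because W and T are independent.

We can use this result to find the mean of $W \sim \text{Beta}(a, b)$. Since $E[X] = a/\lambda$ and $E[Y] = b/\lambda$,

$$E[W] = \frac{E[X]}{E[X+Y]} = \frac{a/\lambda}{a/\lambda + b/\lambda} = \frac{a}{a+b}.$$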
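A Monte Carlo sketch of the mean result, under arbitrary example values a = 2, b = 6, λ = 0.5. In this setting E[X/(X+Y)], E[X]/E[X+Y] and a/(a+b) should all be close, and the sample correlation of W and T should be near zero, as independence implies.

```r
set.seed(42)
a <- 2         # example shape of the bank waiting time
b <- 6         # example shape of the post office waiting time
lambda <- 0.5  # example common rate
n_sim <- 2e5

X <- rgamma(n_sim, shape = a, rate = lambda)
Y <- rgamma(n_sim, shape = b, rate = lambda)
T_total <- X + Y
W <- X / T_total

# E[X / (X + Y)], E[X] / E[X + Y] and a / (a + b) should all be close
print(c(mean_W = mean(W),
        ratio_of_means = mean(X) / mean(T_total),
        formula = a / (a + b)))

# Independence implies zero correlation (the converse does not hold)
print(cor(W, T_total))
```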

Getting Beta parameters in practice

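The Beta distribution is the conjugate prior for many common distributions, so we use it a lot. But in practice, how do we figure out its parameters? It is sometimes useful to estimate the parameters quickly using the method of moments. Given a target mean $\mu$ and variance $\sigma^2$ (with $\sigma^2 < \mu(1-\mu)$), solving $\mu = \frac{\alpha}{\alpha+\beta}$ and $\sigma^2 = \frac{\alpha\beta}{(\alpha+\beta)^2(\alpha+\beta+1)}$ gives:

$$\alpha + \beta = \frac{\mu(1-\mu)}{\sigma^2} - 1, \qquad \alpha = \mu\,(\alpha+\beta), \qquad \beta = (1-\mu)\,(\alpha+\beta).$$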

Here is the R code:

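```r
# Calculate Beta parameters from a target mean and standard deviation
# using the method of moments
cal_beta_params <- function(meanX, sdX) {
  varX <- sdX^2
  # The moment equations only have a valid solution when var < mean * (1 - mean)
  stopifnot(meanX > 0, meanX < 1, varX < meanX * (1 - meanX))
  sum_ab <- meanX * (1 - meanX) / varX - 1  # alpha + beta
  a <- sum_ab * meanX                        # alpha
  b <- sum_ab * (1 - meanX)                  # beta
  # Both Beta parameters are shape parameters (shape1, shape2 in dbeta/rbeta)
  c(shape1 = a, shape2 = b)
}

# example
cal_beta_params(meanX = 0.136, sdX = 0.103)
##   shape1   shape2
## 1.370320 8.705559
```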
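One way to sanity-check the method-of-moments formulas is to draw data from a Beta with known parameters and see whether they are recovered. This sketch assumes arbitrary "true" values Beta(2, 5) and applies the same formulas as `cal_beta_params` to the sample mean and variance.

```r
set.seed(7)
true_a <- 2  # example "true" parameters
true_b <- 5
samples <- rbeta(1e5, shape1 = true_a, shape2 = true_b)

# Plug the sample mean and variance into the method-of-moments formulas
m <- mean(samples)
v <- var(samples)
sum_ab <- m * (1 - m) / v - 1
estimate <- c(shape1 = sum_ab * m, shape2 = sum_ab * (1 - m))
print(round(estimate, 2))  # close to the true values 2 and 5
```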

Summary

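To summarize, the bank–post office story tells us that when we add independent Gamma r.v.s $X \sim \text{Gamma}(a, \lambda)$ and $Y \sim \text{Gamma}(b, \lambda)$ with the same rate $\lambda$:

- the total $X + Y \sim \text{Gamma}(a + b, \lambda)$,
- the fraction $\frac{X}{X+Y} \sim \text{Beta}(a, b)$,
- the total is independent of the fraction.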

Examples

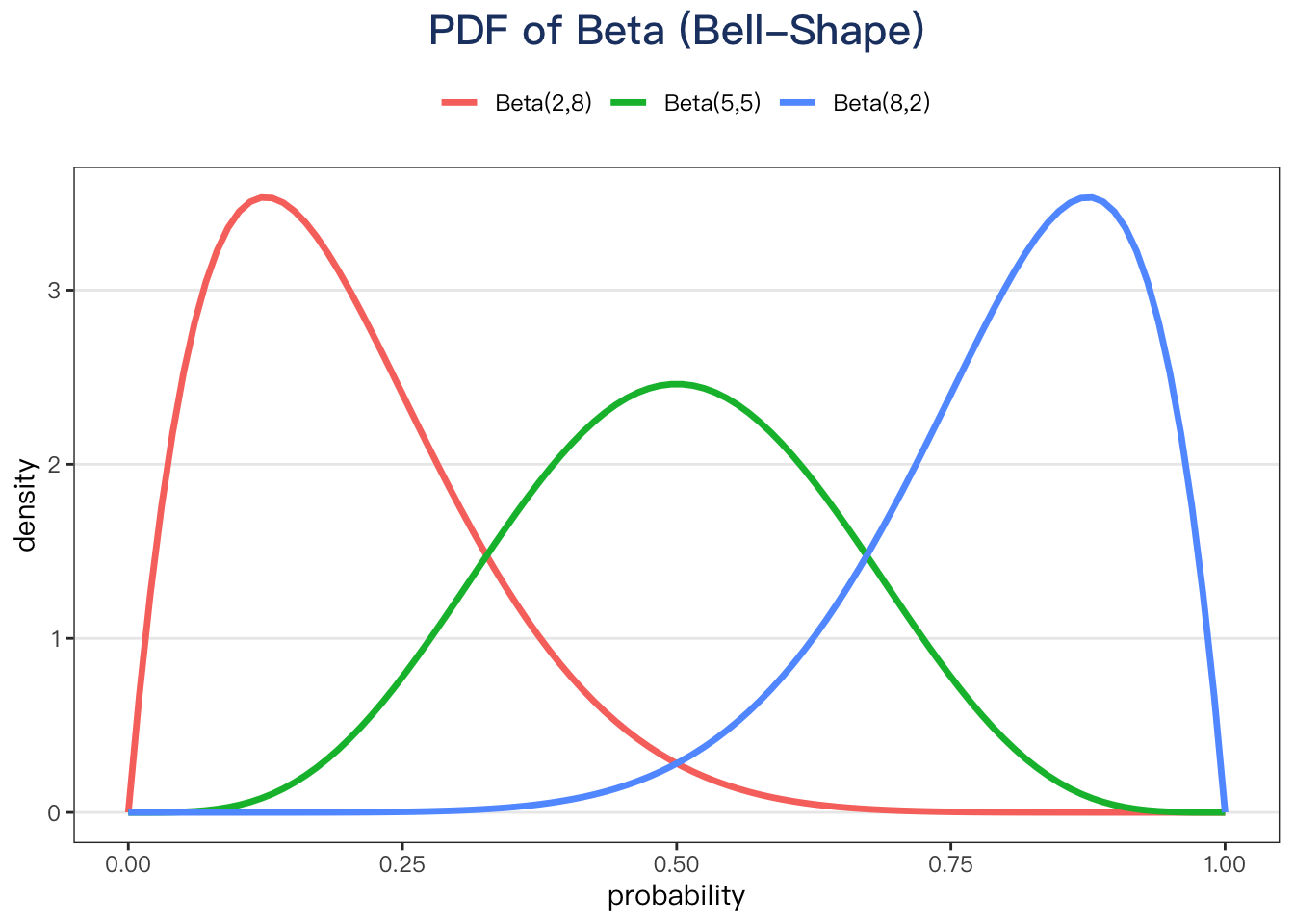

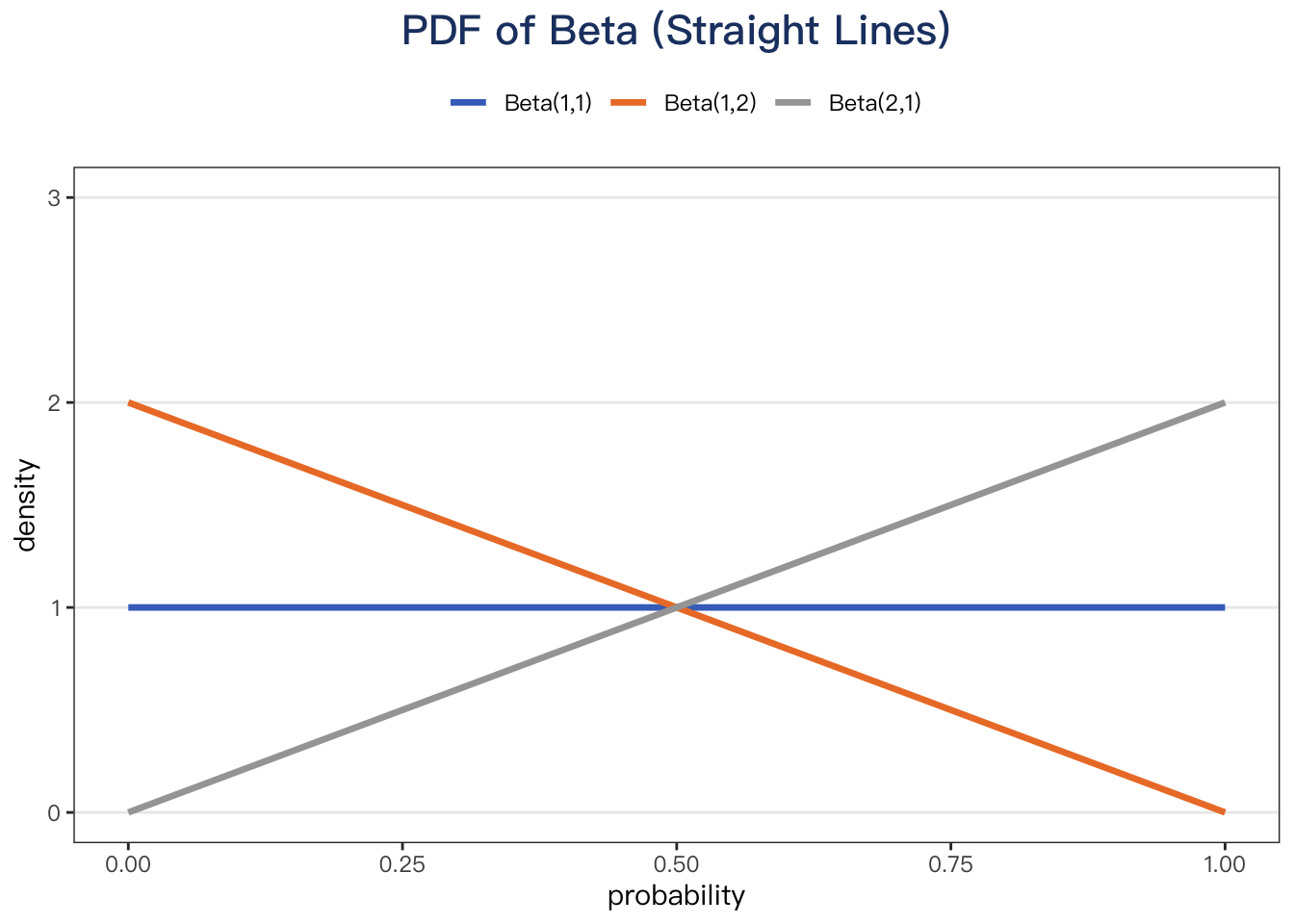

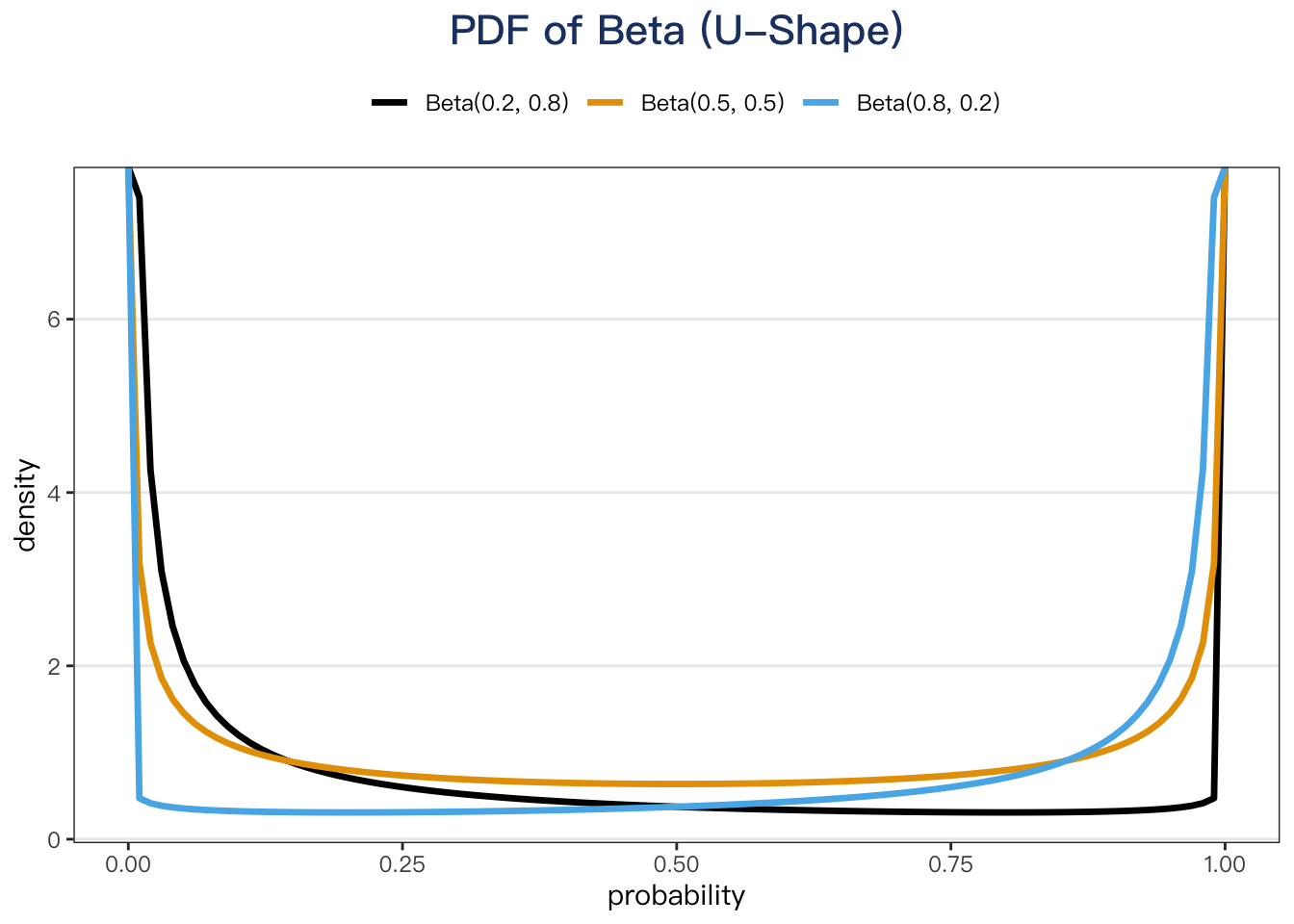

The PDF of the Beta distribution can be U-shaped with asymptotic ends, bell-shaped, strictly increasing or decreasing, or even a straight line. As you change α or β, the shape of the distribution changes.

I. Bell-Shape

The PDF of a beta distribution is approximately normal if α + β is large enough and α & β are approximately equal.
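To make "approximately normal" concrete, this sketch compares each Beta density with the normal density of the same mean and standard deviation, and reports the largest gap relative to the Beta peak. `normal_gap` is a hypothetical helper, and Beta(2, 2) versus Beta(50, 50) are example choices.

```r
# Hypothetical helper: largest gap between a Beta density and the normal
# density with the same mean and sd, relative to the Beta density's peak
normal_gap <- function(a, b) {
  mu <- a / (a + b)
  sigma <- sqrt(a * b / ((a + b)^2 * (a + b + 1)))
  p <- seq(0.01, 0.99, by = 0.001)
  dens <- dbeta(p, a, b)
  max(abs(dens - dnorm(p, mean = mu, sd = sigma))) / max(dens)
}

print(normal_gap(2, 2))    # small alpha + beta: visibly non-normal
print(normal_gap(50, 50))  # large, equal alpha and beta: close to normal
```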

Intuition behind Bell-Shape

Why would Beta(2,2) be bell-shaped?

If you think of:

- α-1 as the number of successes
- β-1 as the number of failures

then Beta(2,2) means you got 1 success and 1 failure.

So it makes sense that the density of the success probability peaks at 0.5.
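The peak can be located numerically with base R's `optimize`, and it matches the standard closed-form mode (α-1)/(α+β-2) for α, β > 1, which is exactly successes / (successes + failures) under the reading above. `beta_mode` is a hypothetical helper, and Beta(8, 4) is an extra example.

```r
# Numerically locate the peak of the Beta(2, 2) density
peak <- optimize(function(p) dbeta(p, 2, 2), interval = c(0, 1), maximum = TRUE)$maximum
print(round(peak, 3))  # 0.5

# Hypothetical helper: closed-form mode for alpha > 1 and beta > 1
beta_mode <- function(a, b) (a - 1) / (a + b - 2)
print(beta_mode(2, 2))  # 0.5: 1 success, 1 failure
print(beta_mode(8, 4))  # 0.7: 7 successes, 3 failures
```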

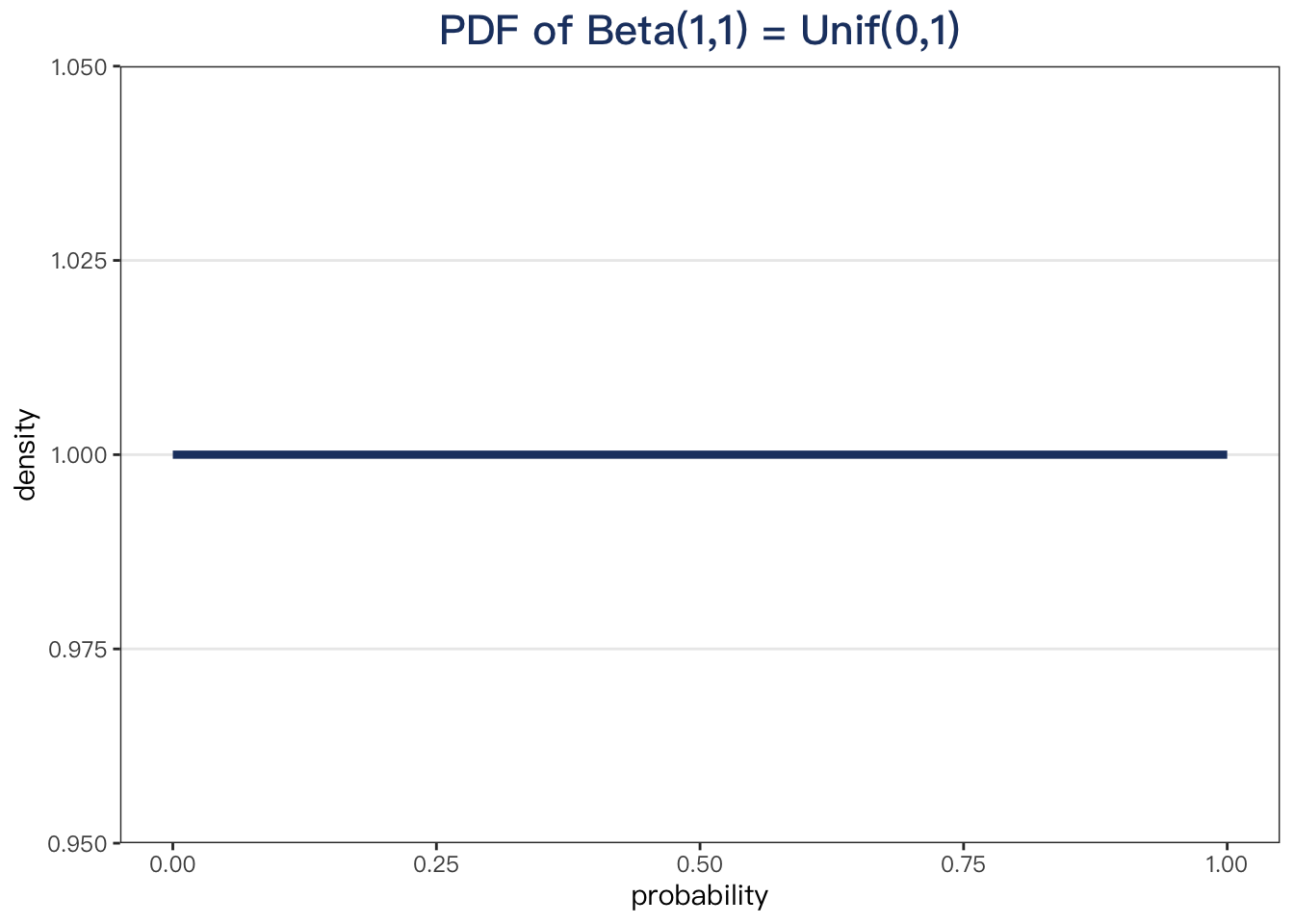

Also, Beta(1,1) means you have seen zero heads and zero tails. With no data, your belief about the probability of success should be the same everywhere in [0,1], and the flat horizontal line confirms it.

II. Straight Lines

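When α = 1 or β = 1, the Beta PDF can be a straight line, but only in three cases: Beta(1,1) is the flat line 1, Beta(2,1) is the increasing line 2x, and Beta(1,2) is the decreasing line 2(1-x). With a larger second parameter the PDF curves, e.g. Beta(1,3) has density 3(1-x)².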
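These straight-line cases can be confirmed against `dbeta` at a few arbitrary points, along with a curved counterexample where α = 1 but β = 3.

```r
p <- c(0.1, 0.25, 0.5, 0.9)

# The three straight-line cases
print(isTRUE(all.equal(dbeta(p, 1, 1), rep(1, length(p)))))  # TRUE: flat
print(isTRUE(all.equal(dbeta(p, 2, 1), 2 * p)))               # TRUE: increasing line
print(isTRUE(all.equal(dbeta(p, 1, 2), 2 * (1 - p))))         # TRUE: decreasing line

# With alpha = 1 but beta = 3 the density is curved: 3 * (1 - p)^2
print(isTRUE(all.equal(dbeta(p, 1, 3), 3 * (1 - p)^2)))       # TRUE
```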

III. U-Shape

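When α < 1 and β < 1, the PDF is U-shaped: the density goes to infinity at both 0 and 1.

When, in addition, α = β, the U is symmetric about 0.5.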
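As an example with both parameters below 1, this sketch evaluates Beta(0.5, 0.5) (the arcsine distribution) near the ends and at the center, and checks it against its closed form 1/(π√(p(1-p))), which follows from B(0.5, 0.5) = π.

```r
# Beta(0.5, 0.5) as an example with alpha < 1 and beta < 1
p <- c(0.001, 0.01, 0.5, 0.99, 0.999)
dens <- dbeta(p, shape1 = 0.5, shape2 = 0.5)
print(round(dens, 2))  # large near 0 and 1, smallest at the center

# It matches the closed form 1 / (pi * sqrt(p * (1 - p)))
print(isTRUE(all.equal(dens, 1 / (pi * sqrt(p * (1 - p))))))  # TRUE
```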