Notes on Longoni et al. (2019) – Resistance to Medical AI

Paper: “Resistance to Medical Artificial Intelligence,” Journal of Consumer Research, 46 (4), 629–50.

Main Topic or Phenomenon

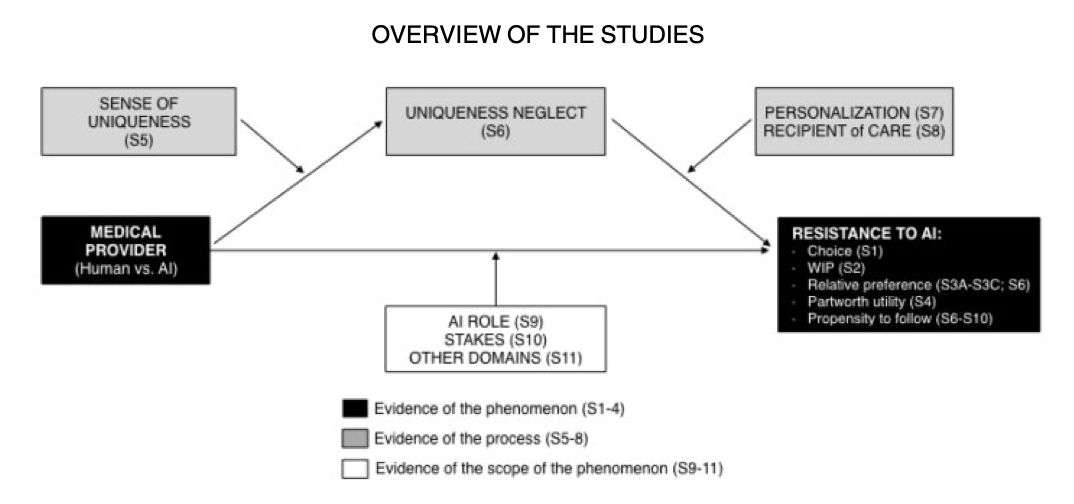

This paper examines consumer resistance to artificial intelligence (AI) in healthcare settings. The authors investigate why consumers are reluctant to utilize medical care delivered by AI providers rather than human providers, even when AI demonstrates equal or superior performance. The phenomenon spans prevention, diagnosis, and treatment decisions.

Theoretical Construct

Uniqueness Neglect: The central theoretical construct is uniqueness neglect, defined as consumers’ concern that AI providers are less able than human providers to account for their unique characteristics, circumstances, and symptoms. This emerges from a mismatch between two fundamental beliefs:

- Consumers view themselves as unique and different from others

- Consumers view machines as operating in a standardized, rote manner that treats every case the same way

The construct builds on the “broken leg hypothesis” from clinical judgment literature, which suggests people distrust statistical models because they fear key evidence might be omitted.

Key Findings

- Consistent resistance pattern: Across 11 studies, consumers show reluctance to utilize healthcare from AI vs. human providers, evidenced by lower utilization rates, reduced willingness to pay, and decreased sensitivity to performance differences.

- Performance doesn’t eliminate resistance: Even when AI providers demonstrate superior performance to human providers, consumers still prefer human providers.

- Uniqueness neglect mediates resistance: The concern that AI cannot account for one’s unique medical characteristics fully explains the preference for human providers, whereas alternative mechanisms such as responsibility offloading or perceptions of humanness do not.

- Individual differences matter: Consumers who perceive themselves as more unique show stronger resistance to medical AI.

- Resistance is context-dependent: Resistance diminishes when care is framed as personalized, when deciding for “average others” rather than oneself, or when AI supports rather than replaces human decision-makers.

Boundary Conditions and Moderators

Personalization: When AI care is explicitly described as “personalized and tailored to your unique characteristics,” resistance disappears. This suggests consumers assume human care is inherently personalized but AI care is not.

Decision target: Resistance to medical AI emerges when deciding for oneself or for unique others, but not when deciding for an “average person.” This pattern supports the uniqueness-neglect account.

AI role: Consumers accept AI when it supports physician decision-making but resist when AI replaces the physician as the primary decision-maker.

Medical stakes: Preliminary evidence suggests resistance is stronger for high-stakes vs. low-stakes medical decisions.

Self-perceived uniqueness: Higher individual levels of perceived personal uniqueness amplify resistance to medical AI.

Building on Previous Work

The paper extends the clinical vs. actuarial judgment literature (originating with Meehl 1954) from non-medical domains into healthcare. While previous research documented that people prefer human over statistical judgments even when the human judgments perform worse, this work:

- Provides the first systematic examination of consumer (vs. physician) preferences for medical AI

- Identifies a novel psychological mechanism (uniqueness neglect) beyond previously speculated drivers

- Demonstrates the phenomenon persists even with explicit performance information

- Tests across prevention, diagnosis, and treatment contexts

The work challenges the assumption that superior AI performance alone will drive adoption, showing psychological barriers persist regardless of objective capabilities.

Major Theoretical Contribution

The paper makes three primary theoretical contributions:

- Domain extension: Extends automation psychology research into medical consumer decision-making, an important but understudied domain with unique characteristics (high stakes, uncertainty, unfamiliarity).

- Mechanism identification: Introduces uniqueness neglect as a novel psychological driver of resistance to statistical judgments, providing empirical support for the long-speculated “broken leg hypothesis.”

- Medical decision-making advancement: Identifies uniqueness concerns as a previously unexplored psychological mechanism influencing medical choices, with potential applications beyond AI contexts.

Major Managerial Implication

Healthcare providers and AI developers should focus on demonstrating personalization capabilities to overcome consumer resistance. Specific recommendations include:

- Collect and highlight extensive individual patient information

- Frame AI recommendations as “based on your unique profile”

- Implement hybrid models where physicians retain final decision authority while AI provides support

- Emphasize how AI accounts for individual patient characteristics rather than treating everyone identically

The findings suggest that technical superiority alone is insufficient for AI adoption; addressing psychological barriers through personalization framing is crucial.

Unexplored Theoretical Factors

Several potential moderators and mechanisms remain unexplored:

Individual difference factors: Risk tolerance, need for control, technology anxiety, health locus of control, medical expertise/knowledge, or cultural values around healthcare relationships.

Contextual factors: Healthcare setting (hospital vs. clinic vs. home), urgency of decision, availability of second opinions, cost considerations, or social influence from family/peers.

AI characteristics: Transparency of AI decision-making process, explanation quality, learning/adaptation capabilities, or anthropomorphic features.

Temporal factors: Experience with AI over time, generational differences, or how resistance changes as AI becomes more prevalent.

Process factors: Level of patient involvement in data input, feedback mechanisms, or ability to customize AI parameters.

Emotional factors: Anxiety about medical decisions, trust in technology, or emotional comfort needs during healthcare interactions.

Reference

Longoni, Chiara, Andrea Bonezzi, and Carey K. Morewedge (2019), “Resistance to Medical Artificial Intelligence,” Journal of Consumer Research, 46 (4), 629–50.