Notes for Variational Inference

Introduction

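In modern Bayesian statistics, we often face posterior distributions that are difficult to compute. Let $x = x_{1:n}$ denote the observations and $z = z_{1:m}$ the latent variables; the object of interest is the posterior $p(z \mid x)$. The traditional tool for approximating it, Markov chain Monte Carlo (MCMC), can be: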

- Slow for big datasets or complex models.

- Difficult to scale in the era of massive data.

Variational inference (VI) offers a faster alternative. The key difference between MCMC and VI is:

- MCMC samples from a Markov chain whose stationary distribution is the posterior.

- VI solves an optimization problem.

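The main idea behind VI is to use optimization. Specifically:

- Step 1: posit a family of approximate densities $\mathcal{Q}$ over the latent variables.

- Step 2: find the member of $\mathcal{Q}$ that is closest to the exact posterior $p(z \mid x)$ in Kullback–Leibler (KL) divergence.

Key Idea: Rather than sampling, VI optimizes. It finds a best-guess distribution by minimizing a divergence to the posterior.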
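A minimal numerical sketch of the two steps, assuming a made-up one-dimensional target: the unnormalized density $\tilde p(z) = \mathcal{N}(z; 0, 1)\,\sigma(4z)$ (a right-skewed stand-in for a posterior, with $\sigma$ the logistic sigmoid) and the Gaussian family $\mathcal{Q} = \{\mathcal{N}(m, s^2)\}$. The KL divergence is approximated on a grid and minimized by brute-force search; the grid ranges and the helper names `gaussian_pdf` and `kl_q_p` are illustrative choices, and practical VI would use gradient-based or coordinate-wise optimization instead.

```python
import numpy as np

# Hypothetical unnormalized target: p~(z) = N(z; 0, 1) * sigmoid(4 z).
z = np.linspace(-6.0, 6.0, 4001)
dz = z[1] - z[0]
# log N(z; 0, 1) up to a constant, plus log sigmoid(4 z) = -log(1 + exp(-4 z))
log_p_tilde = -0.5 * z**2 - np.log1p(np.exp(-4.0 * z))
log_p = log_p_tilde - np.log(np.sum(np.exp(log_p_tilde)) * dz)   # normalize on the grid

def gaussian_pdf(z, m, s):
    """Density of N(m, s^2) evaluated at z."""
    return np.exp(-0.5 * ((z - m) / s) ** 2) / (s * np.sqrt(2.0 * np.pi))

def kl_q_p(m, s):
    """Grid approximation of KL(q || p) for q = N(m, s^2)."""
    q = gaussian_pdf(z, m, s)
    keep = q > 0            # where q underflows to 0, q log q contributes nothing
    return np.sum(q[keep] * (np.log(q[keep]) - log_p[keep])) * dz

# Step 1: the family Q = {N(m, s^2)}.  Step 2: search it for the KL minimizer.
best_kl, best_m, best_s = min(
    (kl_q_p(m, s), m, s)
    for m in np.linspace(-2.0, 2.0, 81)
    for s in np.linspace(0.1, 2.0, 39)
)
print(f"closest Gaussian: N({best_m:.2f}, {best_s:.2f}^2), KL = {best_kl:.4f}")
```

Because the target is skewed and every member of the family is symmetric, even the best member is only an approximation: the minimal KL stays strictly positive.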

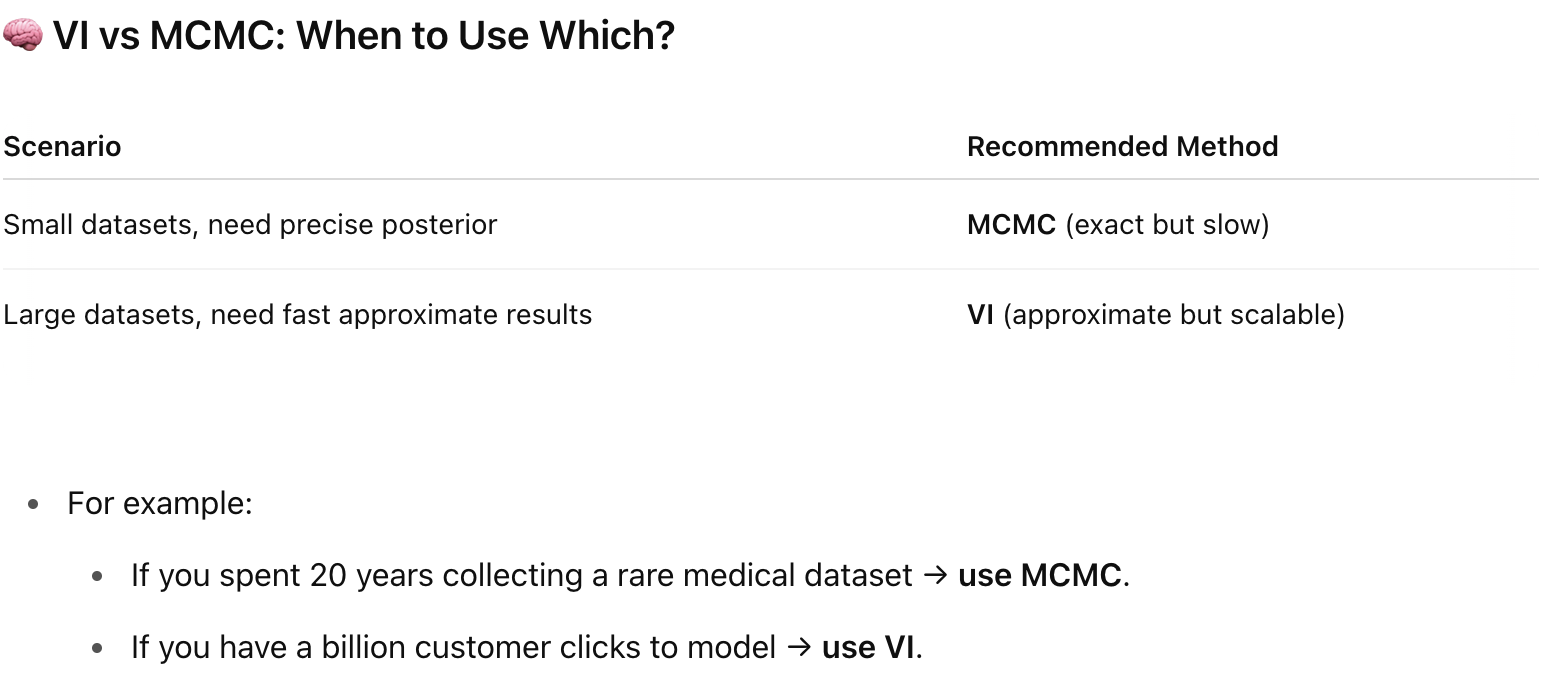

VI vs MCMC: When to Use Which?

Pros and Cons of VI

- Pros:

  - Much faster than MCMC.

  - Easy to scale with stochastic optimization and distributed computation.

- Cons:

  - VI tends to underestimate posterior variance (it is often “overconfident”).

  - Unlike MCMC, which produces exact samples from the posterior asymptotically, VI offers no such guarantee; it only finds the closest member of the chosen family.
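One way to see the variance underestimation is a case with a closed-form answer. Assuming, for illustration only, that the posterior is a correlated 2-D Gaussian $\mathcal{N}(\mu, \Sigma)$ with precision $\Lambda = \Sigma^{-1}$, the fully factorized Gaussian that minimizes $\mathrm{KL}(q \,\|\, p)$ has factor variances $1/\Lambda_{jj}$, a standard result for factorized approximations of Gaussians. These never exceed the true marginal variances $\Sigma_{jj}$, and the gap grows with the correlation.

```python
import numpy as np

# Illustrative target: a correlated 2-D Gaussian posterior (an assumption of this sketch).
Sigma = np.array([[1.0, 0.9],
                  [0.9, 1.0]])
Lambda = np.linalg.inv(Sigma)        # precision matrix

# For a Gaussian target, the factorized q minimizing KL(q || p) has
# Gaussian factors with variance 1 / Lambda_jj.
mf_var = 1.0 / np.diag(Lambda)
true_var = np.diag(Sigma)

print("true marginal variances:", true_var)
print("factorized q variances: ", mf_var)   # approximately 0.19 each
```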

Variational Inference

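Recall that in the Bayesian framework, the posterior follows from Bayes' rule,

$$p(z \mid x) = \frac{p(x \mid z)\,p(z)}{p(x)}, \qquad p(x) = \int p(x \mid z)\,p(z)\,dz,$$

and the evidence $p(x)$ is usually intractable, which is what makes the posterior hard to compute.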
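To make Bayes' rule concrete, here is a sketch on a hypothetical conjugate model, $z \sim \mathcal{N}(0, 1)$ and $x_i \mid z \sim \mathcal{N}(z, 1)$, whose posterior is known in closed form, $\mathcal{N}\big(\sum_i x_i/(n+1),\, 1/(n+1)\big)$. With a single latent variable, the evidence integral can be approximated on a grid; the same approach needs exponentially many grid points as the number of latent variables grows, which is why $p(x)$ is usually out of reach. The simulated data and the grid are arbitrary choices.

```python
import numpy as np

# Hypothetical conjugate model: z ~ N(0, 1), x_i | z ~ N(z, 1), i = 1..n
rng = np.random.default_rng(0)
x = rng.normal(loc=1.5, scale=1.0, size=20)
n = x.size

z = np.linspace(-5.0, 5.0, 20001)
dz = z[1] - z[0]
log_prior = -0.5 * z**2 - 0.5 * np.log(2 * np.pi)
# log p(x | z) for every grid value of z at once (sum over observations)
log_lik = (-0.5 * (x[:, None] - z[None, :]) ** 2 - 0.5 * np.log(2 * np.pi)).sum(axis=0)

joint = np.exp(log_prior + log_lik)     # p(x | z) p(z)
evidence = joint.sum() * dz             # p(x) = integral of p(x | z) p(z) dz
posterior = joint / evidence            # Bayes' rule

post_mean_grid = (z * posterior).sum() * dz
post_mean_exact = x.sum() / (n + 1)     # closed-form posterior mean
print(post_mean_grid, post_mean_exact)
```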

Variational inference turns Bayesian inference into an optimization problem by minimizing KL divergence within a simpler family of distributions, typically using coordinate ascent to maximize the evidence lower bound (ELBO).

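First, the optimization goal is

$$q^*(z) = \arg\min_{q(z) \in \mathcal{Q}} \mathrm{KL}\big(q(z) \,\|\, p(z \mid x)\big),$$

where, using $\log p(z \mid x) = \log p(z, x) - \log p(x)$,

$$\mathrm{KL}\big(q(z) \,\|\, p(z \mid x)\big) = \mathbb{E}_q[\log q(z)] - \mathbb{E}_q[\log p(z \mid x)] = \mathbb{E}_q[\log q(z)] - \mathbb{E}_q[\log p(z, x)] + \log p(x).$$

This objective cannot be computed directly because it depends on $\log p(x)$. Note that, defining

$$\mathrm{ELBO}(q) = \mathbb{E}_q[\log p(z, x)] - \mathbb{E}_q[\log q(z)],$$

we have

$$\begin{aligned}
\log p(x) &= \mathrm{ELBO}(q) + \mathrm{KL}\big(q(z) \,\|\, p(z \mid x)\big) \\
&\ge \mathrm{ELBO}(q).
\end{aligned}$$

This quantity is called the evidence lower bound because it is a lower bound on the log evidence $\log p(x)$.

The second line holds because the KL divergence is always nonnegative, and it is zero only when $q(z) = p(z \mid x)$.

Therefore, because $\log p(x)$ does not depend on $q$, maximizing the ELBO is equivalent to minimizing the KL divergence.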
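The identity $\log p(x) = \mathrm{ELBO}(q) + \mathrm{KL}\big(q(z) \,\|\, p(z \mid x)\big)$ can be checked numerically. This sketch assumes the hypothetical conjugate model $z \sim \mathcal{N}(0, 1)$, $x_i \mid z \sim \mathcal{N}(z, 1)$ with a Gaussian $q(z) = \mathcal{N}(m, s^2)$, for which the ELBO, the KL divergence to the exact posterior, and the log evidence (marginally $x \sim \mathcal{N}(0, I + \mathbf{1}\mathbf{1}^\top)$) all have closed forms. The data and the trial values of $(m, s^2)$ are arbitrary.

```python
import numpy as np

# Hypothetical conjugate model: z ~ N(0, 1), x_i | z ~ N(z, 1), i = 1..n
rng = np.random.default_rng(1)
x = rng.normal(loc=2.0, scale=1.0, size=10)
n = x.size
LOG2PI = np.log(2 * np.pi)

# Exact posterior N(mu_post, var_post).
var_post = 1.0 / (n + 1)
mu_post = x.sum() * var_post
# Exact log evidence: x ~ N(0, I + 1 1^T), det = n + 1, inverse = I - 1 1^T / (n + 1).
log_evidence = -0.5 * (n * LOG2PI + np.log(n + 1) + x @ x - x.sum() ** 2 / (n + 1))

def elbo(m, s2):
    """ELBO(q) = E_q[log p(z, x)] - E_q[log q(z)] for q = N(m, s2), in closed form."""
    e_log_prior = -0.5 * LOG2PI - 0.5 * (m**2 + s2)
    e_log_lik = np.sum(-0.5 * LOG2PI - 0.5 * ((x - m) ** 2 + s2))
    entropy = 0.5 * np.log(2 * np.pi * np.e * s2)        # equals -E_q[log q(z)]
    return e_log_prior + e_log_lik + entropy

def kl_gauss(m, s2, mu, v):
    """KL(N(m, s2) || N(mu, v))."""
    return 0.5 * (np.log(v / s2) + (s2 + (m - mu) ** 2) / v - 1.0)

for m, s2 in [(0.0, 1.0), (1.0, 0.5), (mu_post, var_post)]:
    e = elbo(m, s2)
    kl = kl_gauss(m, s2, mu_post, var_post)
    print(f"ELBO={e:.4f}  KL={kl:.4f}  ELBO+KL={e + kl:.4f}  log p(x)={log_evidence:.4f}")
```

For every choice of $(m, s^2)$ the last two printed values agree, and the ELBO reaches $\log p(x)$ only when $q$ equals the exact posterior.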

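What is the intuition for the ELBO? It can be rewritten as

$$\mathrm{ELBO}(q) = \mathbb{E}_q[\log p(x \mid z)] - \mathrm{KL}\big(q(z) \,\|\, p(z)\big).$$

- The first term is an expected log-likelihood: it tries to “maximize the likelihood” by encouraging densities that place their mass on latent configurations that explain the observed data.

- The second term is the negative KL divergence from the prior: it encourages densities close to the prior $p(z)$.

- Together, the two terms mirror the usual balance between likelihood and prior.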
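The likelihood-prior balance shows up directly in a hypothetical conjugate model, $z \sim \mathcal{N}(0, 1)$ and $x_i \mid z \sim \mathcal{N}(z, 1)$. This sketch writes the ELBO as $\mathbb{E}_q[\log p(x \mid z)] - \mathrm{KL}\big(q(z) \,\|\, p(z)\big)$ for $q = \mathcal{N}(m, s^2)$, fixes $s^2$, and scans $m$ on a grid: the first term alone would pull $m$ to the sample mean, the second alone to the prior mean $0$, and the maximizer $\sum_i x_i/(n+1)$ sits in between. The data and grid are illustrative.

```python
import numpy as np

# Hypothetical model: z ~ N(0, 1), x_i | z ~ N(z, 1).
rng = np.random.default_rng(2)
x = rng.normal(loc=3.0, scale=1.0, size=5)
n = x.size

def elbo_terms(m, s2):
    """Return (E_q[log p(x | z)], KL(q || prior)) for q = N(m, s2)."""
    exp_loglik = np.sum(-0.5 * np.log(2 * np.pi) - 0.5 * ((x - m) ** 2 + s2))
    kl_prior = 0.5 * (-np.log(s2) + s2 + m**2 - 1.0)     # KL(N(m, s2) || N(0, 1))
    return exp_loglik, kl_prior

# Fix the variance (here at the exact posterior variance) and scan the mean.
s2 = 1.0 / (n + 1)
ms = np.linspace(-1.0, 5.0, 6001)
elbos = np.array([ll - kl for ll, kl in (elbo_terms(m, s2) for m in ms)])
best_m = ms[np.argmax(elbos)]

print(f"prior mean 0.00, sample mean {x.mean():.2f}, ELBO-optimal mean {best_m:.2f}")
```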

Mean-Field Variational Family

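In mean-field variational inference, we assume that the variational family factorizes, with each latent variable governed by its own independent factor:

$$q(z) = \prod_{j=1}^{m} q_j(z_j).$$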
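As a small illustration, assuming Gaussian factors with made-up parameters, the mean-field log-density is a sum of per-factor log-densities, which is the same as a multivariate Gaussian whose covariance is forced to be diagonal. That restriction is why a mean-field $q$ cannot represent correlations between latent variables.

```python
import numpy as np

def log_normal(z, m, s2):
    """Elementwise log-density of univariate Gaussians N(m, s2)."""
    return -0.5 * (np.log(2 * np.pi * s2) + (z - m) ** 2 / s2)

# Hypothetical mean-field q over 3 latent variables: q(z) = prod_j N(z_j; means_j, variances_j).
means = np.array([0.5, -1.0, 2.0])
variances = np.array([1.0, 0.25, 4.0])

def log_q_mean_field(z):
    # The log of a product of factors is the sum of the factor log-densities.
    return np.sum(log_normal(z, means, variances))

def log_q_joint(z):
    # The same density written as one multivariate Gaussian with diagonal covariance.
    cov = np.diag(variances)
    diff = z - means
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (z.size * np.log(2 * np.pi) + logdet + diff @ np.linalg.solve(cov, diff))

z = np.array([0.0, 0.0, 1.0])
print(log_q_mean_field(z), log_q_joint(z))   # equal up to floating-point error
```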

Coordinate ascent algorithm

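We will use coordinate ascent variational inference (CAVI), iteratively optimizing each variational factor while holding the others fixed. With the other factors fixed, the optimal $j$-th factor is

$$q_j^*(z_j) \propto \exp\big\{\mathbb{E}_{-j}[\log p(z_j, z_{-j}, x)]\big\},$$

where $\mathbb{E}_{-j}$ denotes the expectation over all factors except $q_j$.

Each update can only increase (or leave unchanged) the ELBO, so CAVI climbs the ELBO to a local maximum, not necessarily the global one. Use the resulting $q(z)$ as an approximation to the posterior $p(z \mid x)$.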
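Below is a sketch of CAVI for the model used as a running example in Blei et al. (2017): a Bayesian mixture of $K$ unit-variance univariate Gaussians, $\mu_k \sim \mathcal{N}(0, \sigma^2)$, $c_i \sim \mathrm{Categorical}(1/K, \dots, 1/K)$, $x_i \mid c_i, \mu \sim \mathcal{N}(\mu_{c_i}, 1)$, with $q(\mu_k) = \mathcal{N}(m_k, s_k^2)$ and $q(c_i) = \mathrm{Categorical}(\varphi_i)$. The updates are $\varphi_{ik} \propto \exp\{\mathbb{E}[\mu_k]\,x_i - \mathbb{E}[\mu_k^2]/2\}$, $s_k^2 = 1/(1/\sigma^2 + \sum_i \varphi_{ik})$, and $m_k = s_k^2 \sum_i \varphi_{ik} x_i$. The prior variance, the quantile-based initialization, the stopping rule, and the simulated data are assumptions of this sketch; the ELBO is tracked to confirm that it never decreases.

```python
import numpy as np

def elbo(x, phi, m, s2, sigma2):
    """ELBO of the mixture model in cavi_gmm, for q(mu_k) = N(m_k, s2_k), q(c_i) = Cat(phi_i)."""
    K = m.size
    e_mu2 = s2 + m**2                                      # E_q[mu_k^2]
    e_log_p_mu = np.sum(-0.5 * np.log(2 * np.pi * sigma2) - e_mu2 / (2 * sigma2))
    e_log_p_c = x.size * np.log(1.0 / K)
    e_log_p_x = np.sum(phi * (-0.5 * np.log(2 * np.pi)
                              - 0.5 * (x[:, None] ** 2 - 2 * x[:, None] * m + e_mu2)))
    e_log_q_c = np.sum(phi * np.log(phi + 1e-300))         # guard against log(0)
    e_log_q_mu = np.sum(-0.5 * np.log(2 * np.pi * np.e * s2))
    return e_log_p_mu + e_log_p_c + e_log_p_x - e_log_q_c - e_log_q_mu

def cavi_gmm(x, K, sigma2=10.0, n_iter=200, tol=1e-8):
    """CAVI for mu_k ~ N(0, sigma2), c_i ~ Cat(1/K), x_i | c_i, mu ~ N(mu_{c_i}, 1)."""
    m = np.quantile(x, (np.arange(K) + 0.5) / K)           # assumed initialization
    s2 = np.ones(K)
    elbos = []
    for _ in range(n_iter):
        # q(c_i): phi_ik proportional to exp{E[mu_k] x_i - E[mu_k^2] / 2}
        logits = np.outer(x, m) - 0.5 * (s2 + m**2)
        logits -= logits.max(axis=1, keepdims=True)        # numerical stability
        phi = np.exp(logits)
        phi /= phi.sum(axis=1, keepdims=True)
        # q(mu_k): Gaussian with precision 1/sigma2 + sum_i phi_ik
        s2 = 1.0 / (1.0 / sigma2 + phi.sum(axis=0))
        m = s2 * (phi * x[:, None]).sum(axis=0)
        elbos.append(elbo(x, phi, m, s2, sigma2))
        if len(elbos) > 1 and abs(elbos[-1] - elbos[-2]) < tol:
            break
    return m, s2, phi, np.array(elbos)

rng = np.random.default_rng(0)
true_means = np.array([-5.0, 0.0, 5.0])                    # hypothetical ground truth
x = rng.normal(loc=np.repeat(true_means, 100), scale=1.0)
m, s2, phi, elbos = cavi_gmm(x, K=3)
print("estimated means:", np.round(np.sort(m), 2))
print("ELBO never decreased:", bool(np.all(np.diff(elbos) >= -1e-8)))
```

Because CAVI only finds a local optimum, a poor initialization (for example, two means started inside the same cluster) can converge to a worse solution; running from several initializations and keeping the one with the highest ELBO is a common remedy.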

There is a strong relationship between this algorithm and Gibbs sampling.

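- In Gibbs sampling, we iteratively sample each latent variable from its complete conditional $p(z_j \mid z_{-j}, x)$.

- In coordinate ascent variational inference, we iteratively set each factor to $q_j(z_j) \propto \exp\big\{\mathbb{E}_{-j}[\log p(z_j \mid z_{-j}, x)]\big\}$, the exponentiated expected log of that same complete conditional.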
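The parallel is easiest to see when everything is Gaussian. Assuming, for illustration, a 2-D Gaussian target $\mathcal{N}(\mu, \Sigma)$ with precision $\Lambda$ standing in for the posterior, the complete conditional is $p(z_1 \mid z_2) = \mathcal{N}\big(\mu_1 - \tfrac{\Lambda_{12}}{\Lambda_{11}}(z_2 - \mu_2),\, 1/\Lambda_{11}\big)$. Gibbs sampling draws from it using the current sample of $z_2$; the optimal CAVI factor $q_1(z_1)$ is Gaussian with the same mean formula, evaluated at the variational mean $\mathbb{E}_q[z_2]$ instead, and variance $1/\Lambda_{11}$. The helper `cond_mean`, the constants, and the chain lengths are hypothetical.

```python
import numpy as np

# Hypothetical 2-D Gaussian target standing in for p(z | x).
mu = np.array([1.0, -1.0])
Sigma = np.array([[1.0, 0.8],
                  [0.8, 1.0]])
Lam = np.linalg.inv(Sigma)                      # precision matrix

def cond_mean(j, other):
    """Mean of the complete conditional p(z_j | z_{-j}) with z_{-j} set to `other`."""
    k = 1 - j
    return mu[j] - Lam[j, k] / Lam[j, j] * (other - mu[k])

# Gibbs sampling: condition on the current *sample* of the other coordinate.
rng = np.random.default_rng(0)
z = np.zeros(2)
draws = []
for _ in range(20000):
    for j in range(2):
        z[j] = rng.normal(cond_mean(j, z[1 - j]), np.sqrt(1.0 / Lam[j, j]))
    draws.append(z.copy())
samples = np.array(draws[1000:])                # discard burn-in

# CAVI: condition on the current variational *mean* of the other coordinate.
m = np.zeros(2)
for _ in range(100):
    for j in range(2):
        m[j] = cond_mean(j, m[1 - j])
q_var = 1.0 / np.diag(Lam)                      # variance of each optimal factor q_j

print("Gibbs mean", samples.mean(axis=0), "| CAVI mean", m)
print("Gibbs var ", samples.var(axis=0), "| CAVI var ", q_var)
```

Both recover the target mean, but the Gibbs draws reflect the true marginal variance of 1, while the CAVI factors report $1/\Lambda_{jj} = 0.36$.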

References

- Blei, D. M., Kucukelbir, A., & McAuliffe, J. D. (2017). Variational Inference: A Review for Statisticians. Journal of the American Statistical Association, 112(518), 859–877. https://doi.org/10.1080/01621459.2017.1285773

- Looking for a nice summary? Check this first: Variational Inference - Princeton CS tutorial

- For derivation details, check this: Introduction to Variational Inference